PrecisionFDA

Truth Challenge

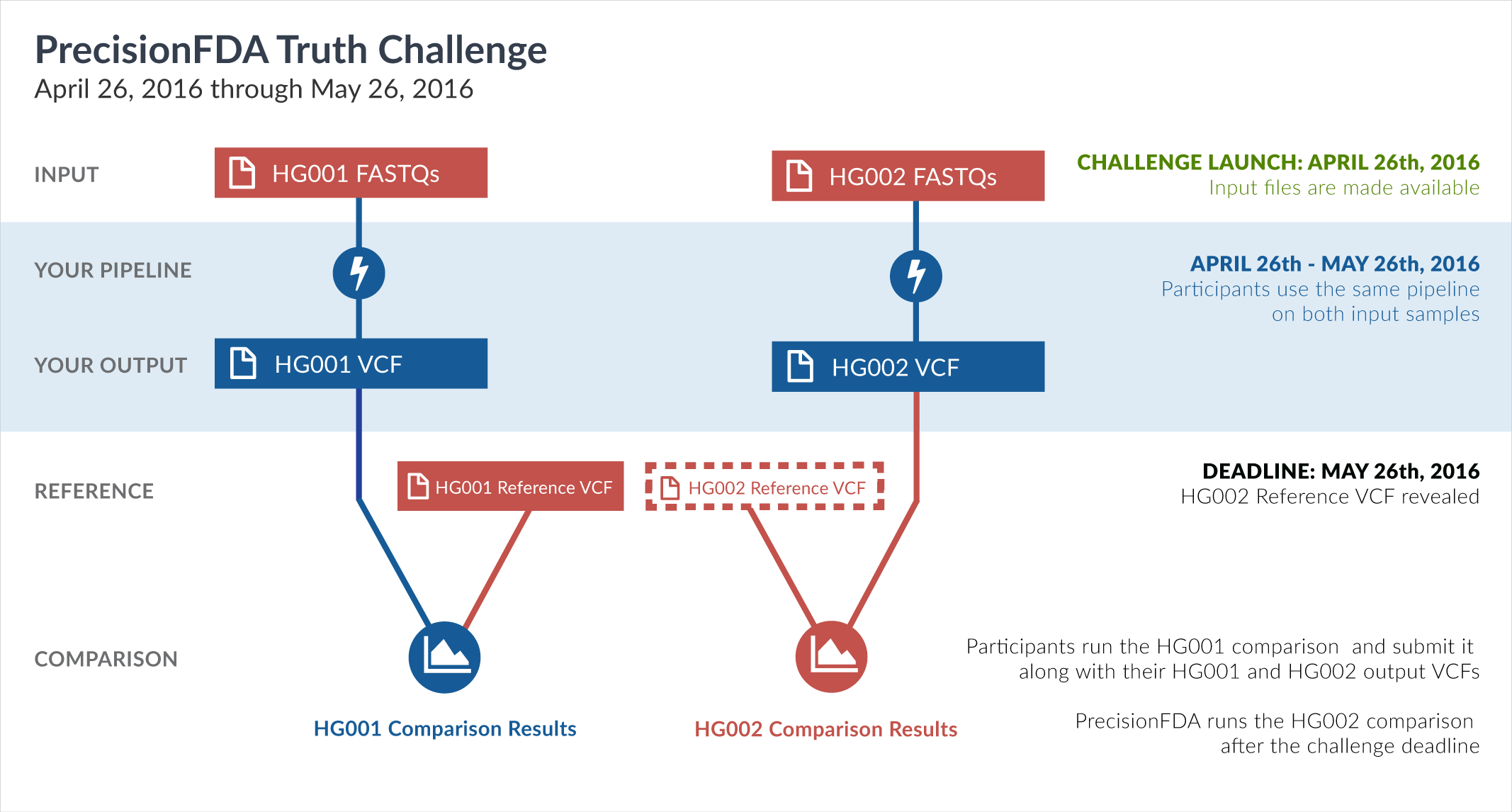

Engage and improve DNA test results with our community challenges

Explore HG002 comparison results

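Use the interactive explorer to filter all results by submission entry and by the other table dimensions (Type, Subtype, Subset, Genotype). The excerpt below shows rows 77951 to 78000 of 86044, all of them SNP transversion (`tv`) results for the raldana-dualsentieon entry.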
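
Conceptually, each filter keeps the rows whose column values match the chosen settings. The Python sketch below illustrates that idea on a few complete rows copied from this table. The `ResultRow` type, its field names, and the `select` helper are hypothetical and are not part of precisionFDA.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ResultRow:
    """Hypothetical record for one table row (only some of the columns)."""
    entry: str
    var_type: str   # "Type" column
    subtype: str    # "Subtype" column
    subset: str     # "Subset" column (stratification region)
    genotype: str   # "Genotype" column
    truth_tp: int
    truth_fn: int
    query_tp: int
    query_fp: int


# A few complete rows copied from the table on this page.
ROWS = [
    ResultRow("raldana-dualsentieon", "SNP", "tv", "lowcmp_SimpleRepeat_diTR_51to200", "*", 19, 7, 19, 0),
    ResultRow("raldana-dualsentieon", "SNP", "tv", "lowcmp_SimpleRepeat_diTR_51to200", "het", 11, 6, 11, 0),
    ResultRow("raldana-dualsentieon", "SNP", "tv", "lowcmp_SimpleRepeat_diTR_51to200", "homalt", 8, 1, 8, 0),
    ResultRow("raldana-dualsentieon", "SNP", "tv", "map_l125_m1_e0", "hetalt", 28, 2, 28, 0),
]


def select(rows, **criteria):
    """Return the rows whose fields equal every keyword criterion given."""
    return [r for r in rows if all(getattr(r, field) == value for field, value in criteria.items())]


if __name__ == "__main__":
    for row in select(ROWS, subset="lowcmp_SimpleRepeat_diTR_51to200", genotype="het"):
        print(row.truth_tp, row.truth_fn)  # prints: 11 6
```

The `*` genotype row appears to aggregate all genotypes (for lowcmp_SimpleRepeat_diTR_51to200, 11 het + 8 homalt = 19 truth TPs), so a filter on `genotype="het"` does not return it.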

| Entry | Type | Subtype | Subset | Genotype | F-score (%) | Recall (%) | Precision (%) | Frac_NA (%) | Truth TP | Truth FN | Query TP | Query FP | FP gt | % FP ma |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| raldana-dualsentieon | SNP | tv | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRgt6_51to200bp_gt95identity_merged | hetalt | 0.0000 | 100.0000 | 0 | 0 | 0 | 0 | 0 | | | |
| raldana-dualsentieon | SNP | tv | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRgt6_51to200bp_gt95identity_merged | homalt | 100.0000 | 100.0000 | 100.0000 | 44.9657 | 481 | 0 | 481 | 0 | 0 | |
| raldana-dualsentieon | SNP | tv | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRgt6_gt200bp_gt95identity_merged | * | 0.0000 | 100.0000 | 0 | 0 | 0 | 0 | 0 | | | |
| raldana-dualsentieon | SNP | tv | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRgt6_gt200bp_gt95identity_merged | het | 0.0000 | 100.0000 | 0 | 0 | 0 | 0 | 0 | | | |
| raldana-dualsentieon | SNP | tv | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRgt6_gt200bp_gt95identity_merged | hetalt | 0.0000 | 100.0000 | 0 | 0 | 0 | 0 | 0 | | | |
| raldana-dualsentieon | SNP | tv | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRgt6_gt200bp_gt95identity_merged | homalt | 0.0000 | 100.0000 | 0 | 0 | 0 | 0 | 0 | | | |
| raldana-dualsentieon | SNP | tv | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRgt6_lt101bp_gt95identity_merged | hetalt | 100.0000 | 100.0000 | 100.0000 | 80.0000 | 1 | 0 | 1 | 0 | 0 | |
| raldana-dualsentieon | SNP | tv | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRgt6_lt101bp_gt95identity_merged | homalt | 100.0000 | 100.0000 | 100.0000 | 62.1205 | 786 | 0 | 786 | 0 | 0 | |
| raldana-dualsentieon | SNP | tv | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRgt6_lt51bp_gt95identity_merged | hetalt | 100.0000 | 100.0000 | 100.0000 | 75.0000 | 1 | 0 | 1 | 0 | 0 | |
| raldana-dualsentieon | SNP | tv | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRgt6_lt51bp_gt95identity_merged | homalt | 100.0000 | 100.0000 | 100.0000 | 67.6126 | 525 | 0 | 525 | 0 | 0 | |
| raldana-dualsentieon | SNP | tv | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRlt7_51to200bp_gt95identity_merged | hetalt | 0.0000 | 100.0000 | 0 | 0 | 0 | 0 | 0 | | | |
| raldana-dualsentieon | SNP | tv | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRlt7_gt200bp_gt95identity_merged | * | 0.0000 | 100.0000 | 0 | 0 | 0 | 0 | 0 | | | |
| raldana-dualsentieon | SNP | tv | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRlt7_gt200bp_gt95identity_merged | het | 0.0000 | 100.0000 | 0 | 0 | 0 | 0 | 0 | | | |
| raldana-dualsentieon | SNP | tv | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRlt7_gt200bp_gt95identity_merged | hetalt | 0.0000 | 0.0000 | 0.0000 | 0 | 0 | 0 | 0 | 0 | | |
| raldana-dualsentieon | SNP | tv | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRlt7_gt200bp_gt95identity_merged | homalt | 0.0000 | 100.0000 | 0 | 0 | 0 | 0 | 0 | | | |
| raldana-dualsentieon | SNP | tv | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRlt7_lt101bp_gt95identity_merged | hetalt | 100.0000 | 100.0000 | 100.0000 | 90.9091 | 2 | 0 | 2 | 0 | 0 | |
| raldana-dualsentieon | SNP | tv | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRlt7_lt51bp_gt95identity_merged | hetalt | 100.0000 | 100.0000 | 100.0000 | 89.4737 | 2 | 0 | 2 | 0 | 0 | |
| raldana-dualsentieon | SNP | tv | lowcmp_Human_Full_Genome_TRDB_hg19_150331_all_gt95identity_merged | hetalt | 100.0000 | 100.0000 | 100.0000 | 92.3077 | 3 | 0 | 3 | 0 | 0 | |
| raldana-dualsentieon | SNP | tv | lowcmp_Human_Full_Genome_TRDB_hg19_150331_all_merged | hetalt | 100.0000 | 100.0000 | 100.0000 | 85.1485 | 15 | 0 | 15 | 0 | 0 | |
| raldana-dualsentieon | SNP | tv | lowcmp_SimpleRepeat_diTR_11to50 | hetalt | 100.0000 | 100.0000 | 100.0000 | 95.2381 | 1 | 0 | 1 | 0 | 0 | |
| raldana-dualsentieon | SNP | tv | lowcmp_SimpleRepeat_diTR_51to200 | * | 84.4444 | 73.0769 | 100.0000 | 96.8333 | 19 | 7 | 19 | 0 | 0 | |
| raldana-dualsentieon | SNP | tv | lowcmp_SimpleRepeat_diTR_51to200 | het | 78.5714 | 64.7059 | 100.0000 | 97.4239 | 11 | 6 | 11 | 0 | 0 | |
| raldana-dualsentieon | SNP | tv | lowcmp_SimpleRepeat_diTR_51to200 | hetalt | 0.0000 | 100.0000 | 0 | 0 | 0 | 0 | 0 | | | |
| raldana-dualsentieon | SNP | tv | lowcmp_SimpleRepeat_diTR_51to200 | homalt | 94.1176 | 88.8889 | 100.0000 | 95.3216 | 8 | 1 | 8 | 0 | 0 | |
| raldana-dualsentieon | SNP | tv | lowcmp_SimpleRepeat_homopolymer_6to10 | hetalt | 100.0000 | 100.0000 | 100.0000 | 66.6667 | 5 | 0 | 5 | 0 | 0 | |
| raldana-dualsentieon | SNP | tv | lowcmp_SimpleRepeat_homopolymer_gt10 | * | 0.0000 | 100.0000 | 0 | 0 | 0 | 0 | 0 | | | |
| raldana-dualsentieon | SNP | tv | lowcmp_SimpleRepeat_homopolymer_gt10 | het | 0.0000 | 100.0000 | 0 | 0 | 0 | 0 | 0 | | | |
| raldana-dualsentieon | SNP | tv | lowcmp_SimpleRepeat_homopolymer_gt10 | hetalt | 0.0000 | 100.0000 | 0 | 0 | 0 | 0 | 0 | | | |
| raldana-dualsentieon | SNP | tv | lowcmp_SimpleRepeat_homopolymer_gt10 | homalt | 0.0000 | 100.0000 | 0 | 0 | 0 | 0 | 0 | | | |
| raldana-dualsentieon | SNP | tv | lowcmp_SimpleRepeat_quadTR_11to50 | hetalt | 100.0000 | 100.0000 | 100.0000 | 64.2857 | 5 | 0 | 5 | 0 | 0 | |
| raldana-dualsentieon | SNP | tv | lowcmp_SimpleRepeat_quadTR_11to50 | homalt | 99.9819 | 99.9637 | 100.0000 | 35.9582 | 2757 | 1 | 2757 | 0 | 0 | |
| raldana-dualsentieon | SNP | tv | lowcmp_SimpleRepeat_quadTR_51to200 | het | 77.9661 | 63.8889 | 100.0000 | 90.4959 | 23 | 13 | 23 | 0 | 0 | |
| raldana-dualsentieon | SNP | tv | lowcmp_SimpleRepeat_quadTR_51to200 | hetalt | 0.0000 | 0.0000 | 0.0000 | 0 | 0 | 0 | 0 | 0 | | |
| raldana-dualsentieon | SNP | tv | lowcmp_SimpleRepeat_quadTR_gt200 | * | 0.0000 | 0.0000 | 0.0000 | 0 | 0 | 0 | 0 | 0 | | |
| raldana-dualsentieon | SNP | tv | lowcmp_SimpleRepeat_quadTR_gt200 | het | 0.0000 | 0.0000 | 0.0000 | 0 | 0 | 0 | 0 | 0 | | |
| raldana-dualsentieon | SNP | tv | lowcmp_SimpleRepeat_quadTR_gt200 | hetalt | 0.0000 | 0.0000 | 0.0000 | 0 | 0 | 0 | 0 | 0 | | |
| raldana-dualsentieon | SNP | tv | lowcmp_SimpleRepeat_quadTR_gt200 | homalt | 0.0000 | 0.0000 | 0.0000 | 0 | 0 | 0 | 0 | 0 | | |
| raldana-dualsentieon | SNP | tv | lowcmp_SimpleRepeat_triTR_11to50 | hetalt | 0.0000 | 100.0000 | 0 | 1 | 0 | 0 | 0 | | | |
| raldana-dualsentieon | SNP | tv | lowcmp_SimpleRepeat_triTR_11to50 | homalt | 99.9237 | 99.8474 | 100.0000 | 34.6480 | 1309 | 2 | 1309 | 0 | 0 | |
| raldana-dualsentieon | SNP | tv | lowcmp_SimpleRepeat_triTR_51to200 | * | 100.0000 | 100.0000 | 100.0000 | 98.2456 | 1 | 0 | 1 | 0 | 0 | |
| raldana-dualsentieon | SNP | tv | lowcmp_SimpleRepeat_triTR_51to200 | het | 100.0000 | 100.0000 | 100.0000 | 97.7778 | 1 | 0 | 1 | 0 | 0 | |
| raldana-dualsentieon | SNP | tv | lowcmp_SimpleRepeat_triTR_51to200 | hetalt | 0.0000 | 0.0000 | 0.0000 | 0 | 0 | 0 | 0 | 0 | | |
| raldana-dualsentieon | SNP | tv | lowcmp_SimpleRepeat_triTR_51to200 | homalt | 0.0000 | 100.0000 | 0 | 0 | 0 | 0 | 0 | | | |
| raldana-dualsentieon | SNP | tv | map_l125_m0_e0 | hetalt | 100.0000 | 100.0000 | 100.0000 | 75.0000 | 9 | 0 | 9 | 0 | 0 | |
| raldana-dualsentieon | SNP | tv | map_l125_m1_e0 | hetalt | 96.5517 | 93.3333 | 100.0000 | 67.4419 | 28 | 2 | 28 | 0 | 0 | |
| raldana-dualsentieon | SNP | tv | map_l125_m2_e0 | hetalt | 96.5517 | 93.3333 | 100.0000 | 72.2772 | 28 | 2 | 28 | 0 | 0 | |
| raldana-dualsentieon | SNP | tv | map_l125_m2_e1 | hetalt | 96.5517 | 93.3333 | 100.0000 | 72.5490 | 28 | 2 | 28 | 0 | 0 | |
| raldana-dualsentieon | SNP | tv | map_l150_m0_e0 | hetalt | 100.0000 | 100.0000 | 100.0000 | 88.8889 | 3 | 0 | 3 | 0 | 0 | |
| raldana-dualsentieon | SNP | tv | map_l150_m1_e0 | hetalt | 94.7368 | 90.0000 | 100.0000 | 74.2857 | 18 | 2 | 18 | 0 | 0 | |
| raldana-dualsentieon | SNP | tv | map_l150_m2_e0 | hetalt | 94.7368 | 90.0000 | 100.0000 | 77.2152 | 18 | 2 | 18 | 0 | 0 | |
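
The derived columns appear to follow hap.py-style benchmarking definitions: Recall = Truth TP / (Truth TP + Truth FN), Precision = Query TP / (Query TP + Query FP), and F-score is the harmonic mean of the two, all expressed in percent. Frac_NA is assumed here to be the share of query calls that were not assessed (for example, calls outside the confident regions); that reading is an assumption, although the rows above are consistent with it. The Python sketch below recomputes these values from the count columns; all function names are hypothetical.

```python
from typing import Optional


def _percent(numerator: int, denominator: int) -> Optional[float]:
    """numerator / denominator as a percentage, or None if undefined (x / 0)."""
    if denominator == 0:
        return None
    return 100.0 * numerator / denominator


def recall(truth_tp: int, truth_fn: int) -> Optional[float]:
    # Fraction of truth-set variants that the query call set reproduced.
    return _percent(truth_tp, truth_tp + truth_fn)


def precision(query_tp: int, query_fp: int) -> Optional[float]:
    # Fraction of assessed query calls that match the truth set.
    return _percent(query_tp, query_tp + query_fp)


def f_score(rec: Optional[float], prec: Optional[float]) -> Optional[float]:
    # Harmonic mean of recall and precision; both inputs are in percent.
    if rec is None or prec is None or rec + prec == 0:
        return None
    return 2.0 * rec * prec / (rec + prec)


def unassessed_query_calls(query_tp: int, query_fp: int, frac_na: float) -> int:
    # ASSUMPTION: Frac_NA = UNK / (TP + FP + UNK) * 100; solve it for UNK.
    if not 0.0 <= frac_na < 100.0:
        raise ValueError("Frac_NA must be in [0, 100) to recover the count")
    assessed = query_tp + query_fp
    return round(assessed * frac_na / (100.0 - frac_na))


if __name__ == "__main__":
    # Row: lowcmp_SimpleRepeat_diTR_51to200, genotype "*"
    r, p = recall(19, 7), precision(19, 0)
    print(f"{f_score(r, p):.4f} {r:.4f} {p:.4f}")  # 84.4444 73.0769 100.0000
    print(unassessed_query_calls(19, 0, 96.8333))  # 581
```

For lowcmp_SimpleRepeat_diTR_51to200 (`*`), 19 truth TPs and 7 truth FNs give a recall of 73.0769 and an F-score of 84.4444, matching the table. Under the Frac_NA assumption, the same row's 96.8333 implies roughly 581 unassessed query calls. Returning `None` for 0 / 0 mirrors the rows where every count is zero, whose blank or zero metric cells carry no information.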