PrecisionFDA

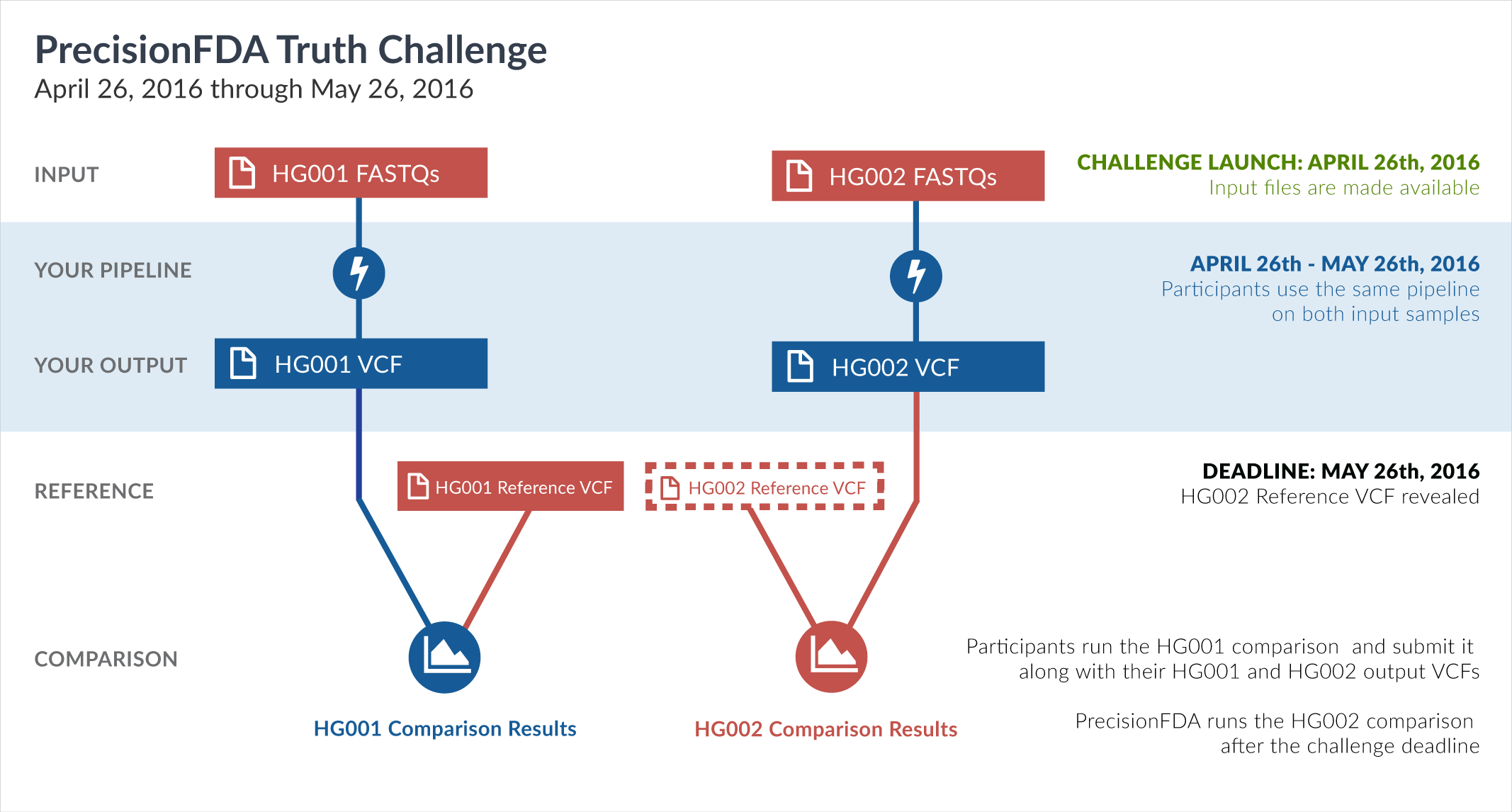

Truth Challenge

Engage and improve DNA test results with our community challenges

Explore HG002 comparison results

Use this interactive explorer to filter all results across submission entries and multiple dimensions.

| Entry | Type | Subtype | Subset | Genotype | F-score | Recall | Precision | Frac_NA | Truth TP | Truth FN | Query TP | Query FP | FP gt | % FP ma |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| raldana-dualsentieon | INDEL | I1_5 | map_l150_m0_e0 | hetalt | 80.0000 | 66.6667 | 100.0000 | 96.7742 | 2 | 1 | 2 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I1_5 | map_l150_m1_e0 | hetalt | 94.1176 | 88.8889 | 100.0000 | 94.4828 | 8 | 1 | 8 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I1_5 | map_l150_m2_e0 | hetalt | 94.1176 | 88.8889 | 100.0000 | 95.2663 | 8 | 1 | 8 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I1_5 | map_l150_m2_e1 | hetalt | 94.7368 | 90.0000 | 100.0000 | 94.8571 | 9 | 1 | 9 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I1_5 | map_l250_m0_e0 | hetalt | | 0.0000 | | 100.0000 | 0 | 0 | 0 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I1_5 | map_l250_m0_e0 | homalt | 100.0000 | 100.0000 | 100.0000 | 96.2185 | 9 | 0 | 9 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I1_5 | map_l250_m1_e0 | hetalt | 100.0000 | 100.0000 | 100.0000 | 97.4359 | 2 | 0 | 2 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I1_5 | map_l250_m2_e0 | hetalt | 100.0000 | 100.0000 | 100.0000 | 97.9592 | 2 | 0 | 2 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I1_5 | map_l250_m2_e1 | hetalt | 100.0000 | 100.0000 | 100.0000 | 98.0198 | 2 | 0 | 2 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I1_5 | map_siren | hetalt | 96.7742 | 93.7500 | 100.0000 | 85.3760 | 105 | 7 | 105 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I1_5 | segdup | hetalt | 96.7742 | 93.7500 | 100.0000 | 95.4455 | 45 | 3 | 46 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I1_5 | segdupwithalt | * | | 0.0000 | | 100.0000 | 0 | 0 | 0 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I1_5 | segdupwithalt | het | | 0.0000 | | 100.0000 | 0 | 0 | 0 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I1_5 | segdupwithalt | hetalt | | 0.0000 | | 100.0000 | 0 | 0 | 0 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I1_5 | segdupwithalt | homalt | | 0.0000 | | 100.0000 | 0 | 0 | 0 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I1_5 | tech_badpromoters | * | 100.0000 | 100.0000 | 100.0000 | 52.1739 | 22 | 0 | 22 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I1_5 | tech_badpromoters | het | 100.0000 | 100.0000 | 100.0000 | 38.4615 | 8 | 0 | 8 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I1_5 | tech_badpromoters | hetalt | 100.0000 | 100.0000 | 100.0000 | 50.0000 | 1 | 0 | 1 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I1_5 | tech_badpromoters | homalt | 100.0000 | 100.0000 | 100.0000 | 58.0645 | 13 | 0 | 13 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I6_15 | HG002complexvar | hetalt | 95.1115 | 90.6787 | 100.0000 | 54.5670 | 1109 | 114 | 1149 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I6_15 | HG002compoundhet | hetalt | 94.4942 | 89.5631 | 100.0000 | 28.5953 | 7646 | 891 | 7686 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I6_15 | decoy | * | | 0.0000 | | 100.0000 | 0 | 0 | 0 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I6_15 | decoy | het | | 0.0000 | | 100.0000 | 0 | 0 | 0 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I6_15 | decoy | hetalt | | 0.0000 | | 100.0000 | 0 | 0 | 0 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I6_15 | decoy | homalt | | 0.0000 | | 100.0000 | 0 | 0 | 0 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I6_15 | func_cds | * | 97.6190 | 95.3488 | 100.0000 | 38.8060 | 41 | 2 | 41 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I6_15 | func_cds | het | 97.8723 | 95.8333 | 100.0000 | 37.8378 | 23 | 1 | 23 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I6_15 | func_cds | hetalt | 85.7143 | 75.0000 | 100.0000 | 25.0000 | 3 | 1 | 3 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I6_15 | func_cds | homalt | 100.0000 | 100.0000 | 100.0000 | 42.3077 | 15 | 0 | 15 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I6_15 | lowcmp_AllRepeats_51to200bp_gt95identity_merged | hetalt | 91.3934 | 84.1509 | 100.0000 | 46.3584 | 446 | 84 | 464 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I6_15 | lowcmp_AllRepeats_gt200bp_gt95identity_merged | * | | 0.0000 | | 100.0000 | 0 | 0 | 0 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I6_15 | lowcmp_AllRepeats_gt200bp_gt95identity_merged | het | | 0.0000 | | 100.0000 | 0 | 0 | 0 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I6_15 | lowcmp_AllRepeats_gt200bp_gt95identity_merged | hetalt | | 0.0000 | | 100.0000 | 0 | 0 | 0 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I6_15 | lowcmp_AllRepeats_gt200bp_gt95identity_merged | homalt | | 0.0000 | | 100.0000 | 0 | 0 | 0 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I6_15 | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRgt6_51to200bp_gt95identity_merged | hetalt | 87.0370 | 77.0492 | 100.0000 | 53.9216 | 47 | 14 | 47 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I6_15 | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRgt6_gt200bp_gt95identity_merged | * | | 0.0000 | | 100.0000 | 0 | 0 | 0 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I6_15 | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRgt6_gt200bp_gt95identity_merged | het | | 0.0000 | | 100.0000 | 0 | 0 | 0 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I6_15 | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRgt6_gt200bp_gt95identity_merged | hetalt | | 0.0000 | | 100.0000 | 0 | 0 | 0 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I6_15 | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRgt6_gt200bp_gt95identity_merged | homalt | | 0.0000 | | 100.0000 | 0 | 0 | 0 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I6_15 | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRgt6_lt101bp_gt95identity_merged | het | 98.5673 | 97.1751 | 100.0000 | 71.3333 | 172 | 5 | 172 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I6_15 | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRgt6_lt101bp_gt95identity_merged | hetalt | 96.3415 | 92.9412 | 100.0000 | 56.0440 | 79 | 6 | 80 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I6_15 | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRgt6_lt51bp_gt95identity_merged | het | 99.1736 | 98.3607 | 100.0000 | 68.7500 | 120 | 2 | 120 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I6_15 | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRgt6_lt51bp_gt95identity_merged | hetalt | 94.9495 | 90.3846 | 100.0000 | 57.8947 | 47 | 5 | 48 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I6_15 | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRlt7_51to200bp_gt95identity_merged | hetalt | 91.6418 | 84.5730 | 100.0000 | 45.2055 | 307 | 56 | 320 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I6_15 | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRlt7_gt200bp_gt95identity_merged | * | | 0.0000 | | 100.0000 | 0 | 0 | 0 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I6_15 | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRlt7_gt200bp_gt95identity_merged | het | | 0.0000 | | 100.0000 | 0 | 0 | 0 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I6_15 | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRlt7_gt200bp_gt95identity_merged | hetalt | | 0.0000 | | 100.0000 | 0 | 0 | 0 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I6_15 | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRlt7_gt200bp_gt95identity_merged | homalt | | 0.0000 | | 100.0000 | 0 | 0 | 0 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I6_15 | lowcmp_SimpleRepeat_diTR_51to200 | * | 77.3333 | 63.0435 | 100.0000 | 56.3910 | 58 | 34 | 58 | 0 | 0 | |
| raldana-dualsentieon | INDEL | I6_15 | lowcmp_SimpleRepeat_diTR_51to200 | het | | 77.7778 | | 100.0000 | 7 | 2 | 0 | 0 | 0 | |
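The percentage columns can be cross-checked against the count columns. The sketch below is an illustration only, not the challenge's own scoring code. It assumes the conventional definitions: Recall = Truth TP / (Truth TP + Truth FN), Precision = Query TP / (Query TP + Query FP), and F-score as the harmonic mean of the two. The function names are hypothetical. Undefined values are returned as `None`, which corresponds to the blank F-score and Precision cells in rows with no scored query calls. Note that the table reports Recall as 0.0000 when a subset contains no truth variants, whereas this sketch returns `None` in that case.

```python
from typing import Optional


def recall_pct(truth_tp: int, truth_fn: int) -> Optional[float]:
    """Recall in percent; None when the subset has no truth variants."""
    total = truth_tp + truth_fn
    if total == 0:
        return None
    return 100.0 * truth_tp / total


def precision_pct(query_tp: int, query_fp: int) -> Optional[float]:
    """Precision in percent; None when no query calls were scored."""
    total = query_tp + query_fp
    if total == 0:
        return None
    return 100.0 * query_tp / total


def f_score_pct(recall: Optional[float], precision: Optional[float]) -> Optional[float]:
    """Harmonic mean of recall and precision; None if either is undefined."""
    if recall is None or precision is None or recall + precision == 0:
        return None
    return 2.0 * recall * precision / (recall + precision)


# Row I1_5 / map_l150_m0_e0 / hetalt: Truth TP=2, Truth FN=1, Query TP=2, Query FP=0
r = recall_pct(2, 1)
p = precision_pct(2, 0)
print(round(r, 4), round(p, 4), round(f_score_pct(r, p), 4))
```

For the last row shown (I6_15, lowcmp_SimpleRepeat_diTR_51to200, het), `recall_pct(7, 2)` rounds to 77.7778 while `precision_pct(0, 0)` is `None`, which agrees with the blank Precision and F-score cells for that row.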