PrecisionFDA

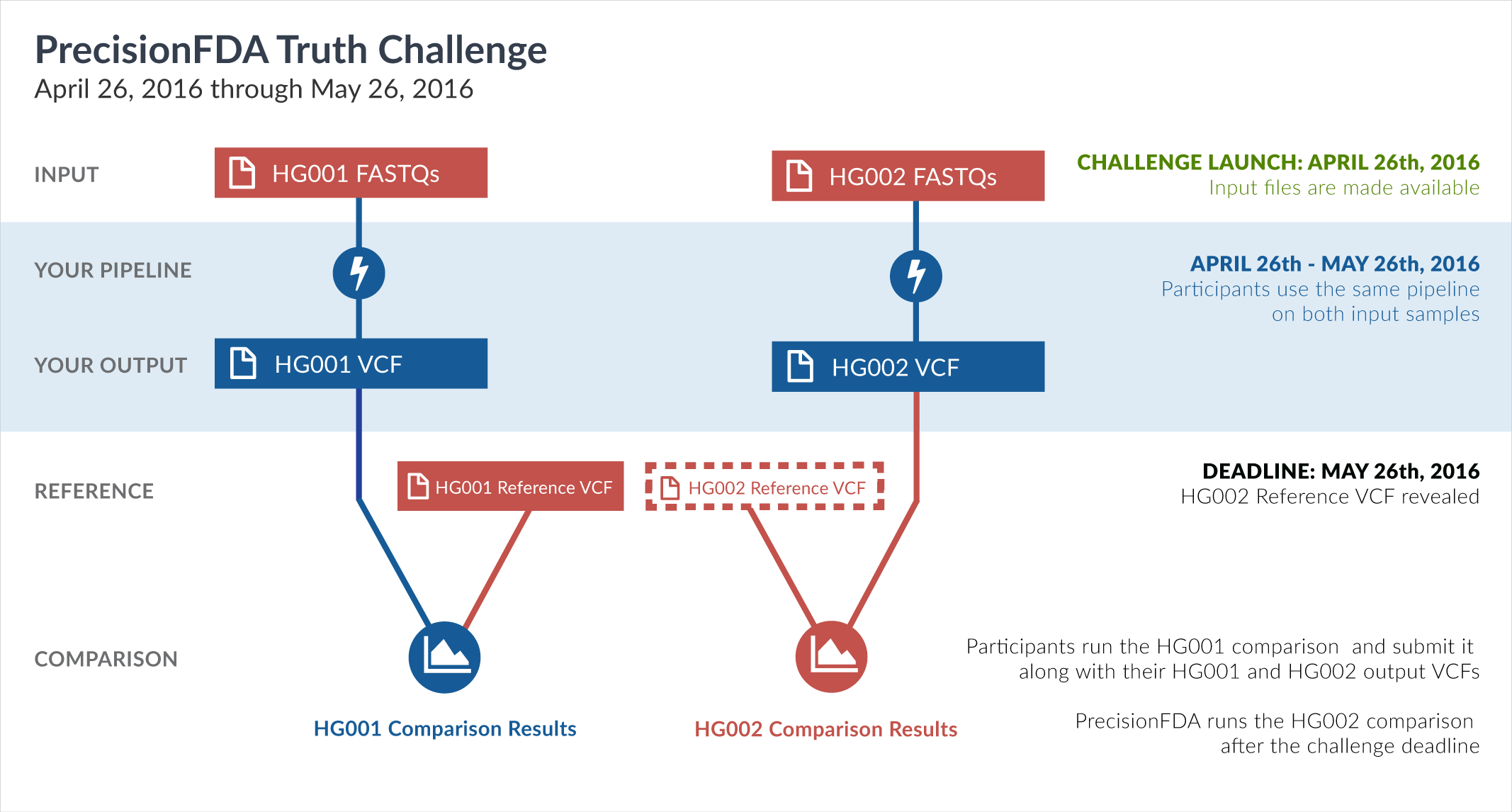

Truth Challenge

Engage and improve DNA test results with our community challenges

Explore HG002 comparison results

Use this interactive explorer to filter all results across submission entries and multiple dimensions.
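The kind of filtering the explorer offers can be approximated offline. The following is a minimal sketch, assuming the rows have been copied into a list of dictionaries keyed by column name. The `filter_rows` helper and the three sample rows are illustrative only and are not part of the PrecisionFDA site.

```python
# Three rows copied from the results table, reduced to a few columns.
rows = [
    {"Entry": "raldana-dualsentieon", "Type": "INDEL", "Subtype": "I1_5",
     "Subset": "HG002compoundhet", "Genotype": "homalt", "F-score": 70.9467},
    {"Entry": "anovak-vg", "Type": "INDEL", "Subtype": "I6_15",
     "Subset": "tech_badpromoters", "Genotype": "*", "F-score": 57.1429},
    {"Entry": "anovak-vg", "Type": "SNP", "Subtype": "ti",
     "Subset": "tech_badpromoters", "Genotype": "het", "F-score": 83.5443},
]


def filter_rows(rows, criteria):
    """Keep rows whose columns equal every value in `criteria` (hypothetical helper)."""
    return [
        row for row in rows
        if all(row.get(column) == value for column, value in criteria.items())
    ]


# Narrow by submission entry and subset, two of the dimensions in the table.
selected = filter_rows(rows, {"Entry": "anovak-vg", "Subset": "tech_badpromoters"})
for row in selected:
    print(row["Type"], row["Subtype"], row["Genotype"], row["F-score"])
```

Adding more keys to `criteria` narrows the selection further, in the same way as combining filters in the explorer.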

Showing rows 81451-81500 of 86044.

| Entry | Type | Subtype | Subset | Genotype | F-score | Recall | Precision | Frac_NA | Truth TP | Truth FN | Query TP | Query FP | FP gt | % FP ma |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| raldana-dualsentieon | INDEL | I16_PLUS | lowcmp_AllRepeats_lt51bp_gt95identity_merged | homalt | 95.6522 | 99.1803 | 92.3664 | 84.9771 | 121 | 1 | 121 | 10 | 10 | 100.0000 |
| raldana-dualsentieon | INDEL | I16_PLUS | lowcmp_Human_Full_Genome_TRDB_hg19_150331 | hetalt | 96.1472 | 92.7162 | 99.8418 | 68.4316 | 611 | 48 | 631 | 1 | 1 | 100.0000 |
| raldana-dualsentieon | INDEL | I16_PLUS | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRlt7_lt101bp_gt95identity_merged | hetalt | 94.6139 | 90.0293 | 99.6904 | 56.8182 | 307 | 34 | 322 | 1 | 1 | 100.0000 |
| raldana-dualsentieon | INDEL | I16_PLUS | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRlt7_lt51bp_gt95identity_merged | hetalt | 94.8795 | 90.5594 | 99.6324 | 53.1842 | 259 | 27 | 271 | 1 | 1 | 100.0000 |
| raldana-dualsentieon | INDEL | I16_PLUS | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRlt7_lt51bp_gt95identity_merged | homalt | 91.6667 | 100.0000 | 84.6154 | 84.1463 | 22 | 0 | 22 | 4 | 4 | 100.0000 |
| raldana-dualsentieon | INDEL | I16_PLUS | lowcmp_Human_Full_Genome_TRDB_hg19_150331_all_gt95identity_merged | hetalt | 94.6120 | 90.0000 | 99.7222 | 61.7428 | 342 | 38 | 359 | 1 | 1 | 100.0000 |
| raldana-dualsentieon | INDEL | I16_PLUS | lowcmp_Human_Full_Genome_TRDB_hg19_150331_all_merged | hetalt | 96.1472 | 92.7162 | 99.8418 | 68.4316 | 611 | 48 | 631 | 1 | 1 | 100.0000 |
| raldana-dualsentieon | INDEL | I16_PLUS | lowcmp_SimpleRepeat_diTR_11to50 | hetalt | 94.5480 | 89.9642 | 99.6241 | 63.9077 | 251 | 28 | 265 | 1 | 1 | 100.0000 |
| raldana-dualsentieon | INDEL | I16_PLUS | lowcmp_SimpleRepeat_diTR_11to50 | homalt | 91.9540 | 97.5610 | 86.9565 | 86.2687 | 40 | 1 | 40 | 6 | 6 | 100.0000 |
| raldana-dualsentieon | INDEL | I16_PLUS | lowcmp_SimpleRepeat_homopolymer_6to10 | * | 96.8185 | 95.7447 | 97.9167 | 79.0393 | 45 | 2 | 47 | 1 | 1 | 100.0000 |
| raldana-dualsentieon | INDEL | I16_PLUS | lowcmp_SimpleRepeat_homopolymer_6to10 | homalt | 88.8889 | 100.0000 | 80.0000 | 89.5833 | 4 | 0 | 4 | 1 | 1 | 100.0000 |
| raldana-dualsentieon | INDEL | I16_PLUS | lowcmp_SimpleRepeat_quadTR_11to50 | homalt | 98.9011 | 100.0000 | 97.8261 | 81.7460 | 45 | 0 | 45 | 1 | 1 | 100.0000 |
| raldana-dualsentieon | INDEL | I16_PLUS | map_siren | homalt | 97.6744 | 100.0000 | 95.4545 | 92.4138 | 21 | 0 | 21 | 1 | 1 | 100.0000 |
| raldana-dualsentieon | INDEL | I1_5 | * | hetalt | 95.4134 | 91.2372 | 99.9903 | 60.4923 | 10214 | 981 | 10271 | 1 | 1 | 100.0000 |
| raldana-dualsentieon | INDEL | I1_5 | HG002complexvar | hetalt | 94.9524 | 90.4403 | 99.9382 | 69.6987 | 1561 | 165 | 1618 | 1 | 1 | 100.0000 |
| raldana-dualsentieon | INDEL | I1_5 | HG002complexvar | homalt | 99.8477 | 99.8959 | 99.7995 | 52.5914 | 13434 | 14 | 13438 | 27 | 27 | 100.0000 |
| raldana-dualsentieon | INDEL | I1_5 | HG002compoundhet | hetalt | 95.4154 | 91.2409 | 99.9902 | 55.7998 | 10198 | 979 | 10253 | 1 | 1 | 100.0000 |
| raldana-dualsentieon | INDEL | I1_5 | HG002compoundhet | homalt | 70.9467 | 99.0881 | 55.2542 | 86.1176 | 326 | 3 | 326 | 264 | 264 | 100.0000 |
| raldana-dualsentieon | INDEL | I1_5 | lowcmp_AllRepeats_51to200bp_gt95identity_merged | homalt | 95.6822 | 97.8799 | 93.5811 | 68.4771 | 277 | 6 | 277 | 19 | 19 | 100.0000 |
| raldana-dualsentieon | INDEL | I1_5 | lowcmp_AllRepeats_lt51bp_gt95identity_merged | homalt | 99.3948 | 99.8304 | 98.9630 | 72.1359 | 3531 | 6 | 3531 | 37 | 37 | 100.0000 |
| raldana-dualsentieon | INDEL | I1_5 | lowcmp_Human_Full_Genome_TRDB_hg19_150331 | homalt | 99.0590 | 99.3619 | 98.7579 | 70.3448 | 3737 | 24 | 3737 | 47 | 47 | 100.0000 |
| raldana-dualsentieon | INDEL | I1_5 | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRgt6_51to200bp_gt95identity_merged | * | 97.3366 | 95.7143 | 99.0148 | 63.7284 | 603 | 27 | 603 | 6 | 6 | 100.0000 |
| raldana-dualsentieon | INDEL | I1_5 | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRgt6_51to200bp_gt95identity_merged | homalt | 98.2716 | 99.5000 | 97.0732 | 60.1167 | 199 | 1 | 199 | 6 | 6 | 100.0000 |
| anovak-vg | INDEL | I1_5 | lowcmp_SimpleRepeat_diTR_51to200 | homalt | 0.0000 | 0.0000 | 25.0000 | 66.6667 | 0 | 0 | 1 | 3 | 3 | 100.0000 |
| anovak-vg | INDEL | I1_5 | lowcmp_SimpleRepeat_triTR_51to200 | * | 12.7389 | 8.0000 | 31.2500 | 63.6364 | 2 | 23 | 5 | 11 | 11 | 100.0000 |
| anovak-vg | INDEL | I1_5 | lowcmp_SimpleRepeat_triTR_51to200 | het | 0.0000 | 0.0000 | 41.6667 | 69.2308 | 0 | 2 | 5 | 7 | 7 | 100.0000 |
| anovak-vg | INDEL | I1_5 | lowcmp_SimpleRepeat_triTR_51to200 | homalt |  | 0.0000 | 0.0000 | 20.0000 | 0 | 0 | 0 | 4 | 4 | 100.0000 |
| anovak-vg | INDEL | I1_5 | map_l250_m0_e0 | homalt | 66.9856 | 77.7778 | 58.8235 | 97.3725 | 7 | 2 | 10 | 7 | 7 | 100.0000 |
| anovak-vg | INDEL | I1_5 | tech_badpromoters | het | 37.5000 | 25.0000 | 75.0000 | 69.2308 | 2 | 6 | 3 | 1 | 1 | 100.0000 |
| anovak-vg | INDEL | I6_15 | lowcmp_SimpleRepeat_triTR_51to200 | * | 25.8065 | 15.3846 | 80.0000 | 37.5000 | 2 | 11 | 4 | 1 | 1 | 100.0000 |
| anovak-vg | INDEL | I6_15 | lowcmp_SimpleRepeat_triTR_51to200 | het | 88.8889 | 100.0000 | 80.0000 | 28.5714 | 1 | 0 | 4 | 1 | 1 | 100.0000 |
| anovak-vg | INDEL | I6_15 | map_l100_m0_e0 | homalt | 72.7273 | 75.0000 | 70.5882 | 81.1111 | 9 | 3 | 12 | 5 | 5 | 100.0000 |
| anovak-vg | INDEL | I6_15 | map_l125_m0_e0 | homalt | 78.9474 | 83.3333 | 75.0000 | 87.8788 | 5 | 1 | 6 | 2 | 2 | 100.0000 |
| anovak-vg | INDEL | I6_15 | tech_badpromoters | * | 57.1429 | 46.1538 | 75.0000 | 50.0000 | 6 | 7 | 6 | 2 | 2 | 100.0000 |
| anovak-vg | INDEL | I6_15 | tech_badpromoters | homalt | 75.0000 | 100.0000 | 60.0000 | 54.5455 | 3 | 0 | 3 | 2 | 2 | 100.0000 |
| anovak-vg | SNP | * | decoy | * | 0.0000 | 0.0000 | 50.0000 | 99.9991 | 0 | 0 | 1 | 1 | 1 | 100.0000 |
| anovak-vg | SNP | * | decoy | het |  | 0.0000 | 0.0000 | 99.9995 | 0 | 0 | 0 | 1 | 1 | 100.0000 |
| anovak-vg | SNP | * | lowcmp_SimpleRepeat_triTR_51to200 | homalt | 50.0000 | 50.0000 | 50.0000 | 94.1176 | 1 | 1 | 1 | 1 | 1 | 100.0000 |
| anovak-vg | SNP | * | tech_badpromoters | * | 89.4635 | 84.0764 | 95.5882 | 39.8230 | 132 | 25 | 130 | 6 | 6 | 100.0000 |
| anovak-vg | SNP | * | tech_badpromoters | het | 84.5070 | 77.9221 | 92.3077 | 45.8333 | 60 | 17 | 60 | 5 | 5 | 100.0000 |
| anovak-vg | SNP | * | tech_badpromoters | homalt | 94.1001 | 90.0000 | 98.5915 | 33.0189 | 72 | 8 | 70 | 1 | 1 | 100.0000 |
| anovak-vg | SNP | ti | decoy | * | 0.0000 | 0.0000 | 50.0000 | 99.9986 | 0 | 0 | 1 | 1 | 1 | 100.0000 |
| anovak-vg | SNP | ti | decoy | het |  | 0.0000 | 0.0000 | 99.9992 | 0 | 0 | 0 | 1 | 1 | 100.0000 |
| anovak-vg | SNP | ti | lowcmp_SimpleRepeat_diTR_51to200 | homalt | 83.3333 | 83.3333 | 83.3333 | 95.2381 | 5 | 1 | 5 | 1 | 1 | 100.0000 |
| anovak-vg | SNP | ti | lowcmp_SimpleRepeat_triTR_11to50 | homalt | 97.9888 | 97.4071 | 98.5775 | 24.3272 | 1390 | 37 | 1386 | 20 | 20 | 100.0000 |
| anovak-vg | SNP | ti | lowcmp_SimpleRepeat_triTR_51to200 | homalt | 50.0000 | 50.0000 | 50.0000 | 90.4762 | 1 | 1 | 1 | 1 | 1 | 100.0000 |
| anovak-vg | SNP | ti | tech_badpromoters | * | 89.2841 | 83.5294 | 95.8904 | 38.1356 | 71 | 14 | 70 | 3 | 3 | 100.0000 |
| anovak-vg | SNP | ti | tech_badpromoters | het | 83.5443 | 75.0000 | 94.2857 | 45.3125 | 33 | 11 | 33 | 2 | 2 | 100.0000 |
| anovak-vg | SNP | ti | tech_badpromoters | homalt | 94.9679 | 92.6829 | 97.3684 | 29.6296 | 38 | 3 | 37 | 1 | 1 | 100.0000 |
| anovak-vg | SNP | tv | decoy | * |  | 0.0000 | 0.0000 | 99.9988 | 0 | 0 | 0 | 1 | 1 | 100.0000 |
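
The Recall, Precision, and F-score columns can be reproduced from the count columns. The sketch below assumes the usual definitions: recall is Truth TP / (Truth TP + Truth FN), precision is Query TP / (Query TP + Query FP), and F-score is their harmonic mean, all shown as percentages. The `summarize` function and its handling of zero denominators are assumptions chosen to match what the rows display, not the challenge's actual implementation.

```python
def ratio_percent(numerator, denominator):
    """Percentage ratio; 0.0 for an empty denominator, as rows with no truth variants show."""
    return 100.0 * numerator / denominator if denominator else 0.0


def summarize(truth_tp, truth_fn, query_tp, query_fp):
    """Return (recall, precision, f_score) as percentages (hypothetical helper)."""
    recall = ratio_percent(truth_tp, truth_tp + truth_fn)
    precision = ratio_percent(query_tp, query_tp + query_fp)
    if recall + precision == 0:
        # Assumption: the harmonic mean is undefined here, so no F-score is reported.
        f_score = None
    else:
        f_score = 2 * recall * precision / (recall + precision)
    return recall, precision, f_score


# First row of the table: Truth TP=121, Truth FN=1, Query TP=121, Query FP=10.
recall, precision, f_score = summarize(121, 1, 121, 10)
print(f"{f_score:.4f} {recall:.4f} {precision:.4f}")  # 95.6522 99.1803 92.3664
```

Truth TP and Query TP are counted separately and can differ, for example 611 versus 631 in the second row, so recall and precision use different numerators.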