PrecisionFDA

Truth Challenge

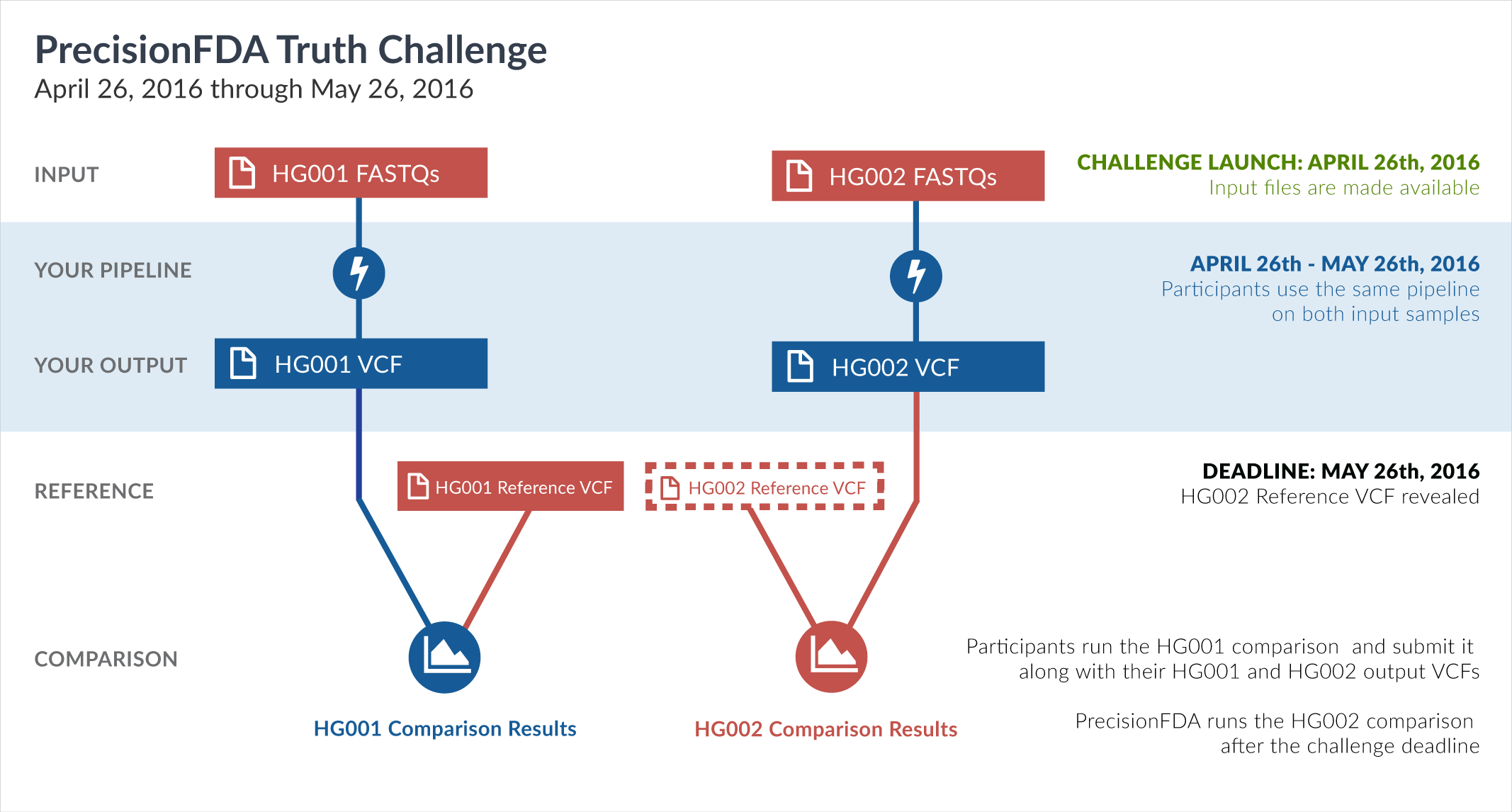

Engage and improve DNA test results with our community challenges

Explore HG002 comparison results

Use this interactive explorer to filter all results across submission entries and multiple dimensions.

Showing rows 1451-1500 of 86044.

| Entry | Type | Subtype | Subset | Genotype | F-score | Recall | Precision | Frac_NA | Truth TP | Truth FN | Query TP | Query FP | FP gt | % FP ma |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| raldana-dualsentieon | INDEL | * | lowcmp_SimpleRepeat_homopolymer_6to10 | hetalt | 97.6077 | 95.3271 | 100.0000 | 72.7898 | 510 | 25 | 514 | 0 | 0 | |
| raldana-dualsentieon | INDEL | * | lowcmp_SimpleRepeat_homopolymer_gt10 | het | 82.4324 | 70.1149 | 100.0000 | 99.9146 | 61 | 26 | 60 | 0 | 0 | |
| raldana-dualsentieon | INDEL | * | lowcmp_SimpleRepeat_homopolymer_gt10 | hetalt | 100.0000 | 100.0000 | 100.0000 | 99.8747 | 16 | 0 | 16 | 0 | 0 | |
| raldana-dualsentieon | INDEL | * | lowcmp_SimpleRepeat_quadTR_11to50 | hetalt | 98.3128 | 96.6816 | 100.0000 | 40.4561 | 2593 | 89 | 2611 | 0 | 0 | |
| raldana-dualsentieon | INDEL | * | lowcmp_SimpleRepeat_triTR_11to50 | hetalt | 99.0153 | 98.0498 | 100.0000 | 28.0159 | 905 | 18 | 907 | 0 | 0 | |
| raldana-dualsentieon | INDEL | * | lowcmp_SimpleRepeat_triTR_51to200 | hetalt | 96.2656 | 92.8000 | 100.0000 | 27.7778 | 116 | 9 | 117 | 0 | 0 | |
| raldana-dualsentieon | INDEL | * | map_l100_m0_e0 | hetalt | 95.2381 | 90.9091 | 100.0000 | 88.7273 | 30 | 3 | 31 | 0 | 0 | |
| raldana-dualsentieon | INDEL | * | map_l100_m1_e0 | hetalt | 93.5622 | 87.9032 | 100.0000 | 83.7758 | 109 | 15 | 110 | 0 | 0 | |
| raldana-dualsentieon | INDEL | * | map_l100_m2_e0 | hetalt | 93.6170 | 88.0000 | 100.0000 | 84.7826 | 110 | 15 | 112 | 0 | 0 | |
| raldana-dualsentieon | INDEL | * | map_l100_m2_e1 | hetalt | 93.5484 | 87.8788 | 100.0000 | 84.6354 | 116 | 16 | 118 | 0 | 0 | |
| raldana-dualsentieon | INDEL | * | map_l125_m0_e0 | hetalt | 95.2381 | 90.9091 | 100.0000 | 94.2197 | 10 | 1 | 10 | 0 | 0 | |
| raldana-dualsentieon | INDEL | * | map_l125_m1_e0 | hetalt | 94.7368 | 90.0000 | 100.0000 | 90.5013 | 36 | 4 | 36 | 0 | 0 | |
| raldana-dualsentieon | INDEL | * | map_l125_m2_e0 | hetalt | 95.0000 | 90.4762 | 100.0000 | 91.2442 | 38 | 4 | 38 | 0 | 0 | |
| raldana-dualsentieon | INDEL | * | map_l125_m2_e1 | hetalt | 93.8272 | 88.3721 | 100.0000 | 91.4607 | 38 | 5 | 38 | 0 | 0 | |
| raldana-dualsentieon | INDEL | * | map_l150_m0_e0 | hetalt | 94.1176 | 88.8889 | 100.0000 | 94.0299 | 8 | 1 | 8 | 0 | 0 | |
| raldana-dualsentieon | INDEL | * | map_l150_m1_e0 | hetalt | 92.3077 | 85.7143 | 100.0000 | 93.5943 | 18 | 3 | 18 | 0 | 0 | |
| raldana-dualsentieon | INDEL | * | map_l150_m2_e0 | hetalt | 92.3077 | 85.7143 | 100.0000 | 94.3574 | 18 | 3 | 18 | 0 | 0 | |
| raldana-dualsentieon | INDEL | * | map_l150_m2_e1 | hetalt | 90.4762 | 82.6087 | 100.0000 | 94.2424 | 19 | 4 | 19 | 0 | 0 | |
| raldana-dualsentieon | INDEL | * | map_l250_m0_e0 | homalt | 97.9592 | 96.0000 | 100.0000 | 96.8545 | 24 | 1 | 24 | 0 | 0 | |
| raldana-dualsentieon | INDEL | * | map_l250_m1_e0 | hetalt | 100.0000 | 100.0000 | 100.0000 | 96.0784 | 6 | 0 | 6 | 0 | 0 | |
| raldana-dualsentieon | INDEL | * | map_l250_m2_e0 | hetalt | 100.0000 | 100.0000 | 100.0000 | 96.7391 | 6 | 0 | 6 | 0 | 0 | |
| raldana-dualsentieon | INDEL | * | map_l250_m2_e1 | hetalt | 100.0000 | 100.0000 | 100.0000 | 96.8421 | 6 | 0 | 6 | 0 | 0 | |
| raldana-dualsentieon | INDEL | * | map_siren | hetalt | 95.1168 | 90.6883 | 100.0000 | 85.1022 | 224 | 23 | 226 | 0 | 0 | |
| raldana-dualsentieon | INDEL | * | segdup | hetalt | 96.0000 | 92.3077 | 100.0000 | 93.7787 | 120 | 10 | 122 | 0 | 0 | |
| raldana-dualsentieon | INDEL | * | segdupwithalt | * | 100.0000 | 100.0000 | 100.0000 | 99.9969 | 1 | 0 | 1 | 0 | 0 | |
| raldana-dualsentieon | INDEL | * | segdupwithalt | het | 100.0000 | 100.0000 | 100.0000 | 99.9951 | 1 | 0 | 1 | 0 | 0 | |
| raldana-dualsentieon | INDEL | * | tech_badpromoters | * | 97.2973 | 94.7368 | 100.0000 | 53.8462 | 72 | 4 | 72 | 0 | 0 | |
| raldana-dualsentieon | INDEL | * | tech_badpromoters | het | 94.5946 | 89.7436 | 100.0000 | 50.7042 | 35 | 4 | 35 | 0 | 0 | |
| raldana-dualsentieon | INDEL | * | tech_badpromoters | hetalt | 100.0000 | 100.0000 | 100.0000 | 50.0000 | 4 | 0 | 4 | 0 | 0 | |
| raldana-dualsentieon | INDEL | * | tech_badpromoters | homalt | 100.0000 | 100.0000 | 100.0000 | 57.1429 | 33 | 0 | 33 | 0 | 0 | |
| raldana-dualsentieon | INDEL | D16_PLUS | HG002compoundhet | hetalt | 96.3719 | 92.9979 | 100.0000 | 26.0481 | 1793 | 135 | 1905 | 0 | 0 | |
| raldana-dualsentieon | INDEL | D16_PLUS | decoy | * | 100.0000 | 100.0000 | 100.0000 | 98.9565 | 6 | 0 | 6 | 0 | 0 | |
| raldana-dualsentieon | INDEL | D16_PLUS | decoy | het | 100.0000 | 100.0000 | 100.0000 | 98.9873 | 4 | 0 | 4 | 0 | 0 | |
| raldana-dualsentieon | INDEL | D16_PLUS | decoy | homalt | 100.0000 | 100.0000 | 100.0000 | 98.6486 | 2 | 0 | 2 | 0 | 0 | |
| raldana-dualsentieon | INDEL | D16_PLUS | func_cds | * | 95.6522 | 91.6667 | 100.0000 | 74.4186 | 11 | 1 | 11 | 0 | 0 | |
| raldana-dualsentieon | INDEL | D16_PLUS | func_cds | het | 93.3333 | 87.5000 | 100.0000 | 77.4194 | 7 | 1 | 7 | 0 | 0 | |
| raldana-dualsentieon | INDEL | D16_PLUS | func_cds | homalt | 100.0000 | 100.0000 | 100.0000 | 66.6667 | 4 | 0 | 4 | 0 | 0 | |
| raldana-dualsentieon | INDEL | D16_PLUS | lowcmp_AllRepeats_gt200bp_gt95identity_merged | het | 100.0000 | 100.0000 | 100.0000 | 99.7361 | 1 | 0 | 1 | 0 | 0 | |
| raldana-dualsentieon | INDEL | D16_PLUS | lowcmp_AllRepeats_gt200bp_gt95identity_merged | hetalt | 100.0000 | 100.0000 | 100.0000 | 91.6667 | 1 | 0 | 1 | 0 | 0 | |
| raldana-dualsentieon | INDEL | D16_PLUS | lowcmp_AllRepeats_lt51bp_gt95identity_merged | hetalt | 97.7075 | 95.5178 | 100.0000 | 35.7483 | 1236 | 58 | 1348 | 0 | 0 | |
| raldana-dualsentieon | INDEL | D16_PLUS | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRgt6_51to200bp_gt95identity_merged | hetalt | 95.2381 | 90.9091 | 100.0000 | 83.5443 | 10 | 1 | 13 | 0 | 0 | |
| raldana-dualsentieon | INDEL | D16_PLUS | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRgt6_gt200bp_gt95identity_merged | het | 100.0000 | 100.0000 | 100.0000 | 99.7283 | 1 | 0 | 1 | 0 | 0 | |
| raldana-dualsentieon | INDEL | D16_PLUS | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRgt6_gt200bp_gt95identity_merged | hetalt | 100.0000 | 100.0000 | 100.0000 | 90.0000 | 1 | 0 | 1 | 0 | 0 | |
| raldana-dualsentieon | INDEL | D16_PLUS | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRgt6_lt101bp_gt95identity_merged | hetalt | 100.0000 | 100.0000 | 100.0000 | 71.6049 | 19 | 0 | 23 | 0 | 0 | |
| raldana-dualsentieon | INDEL | D16_PLUS | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRgt6_lt51bp_gt95identity_merged | hetalt | 100.0000 | 100.0000 | 100.0000 | 46.1538 | 12 | 0 | 14 | 0 | 0 | |
| raldana-dualsentieon | INDEL | D16_PLUS | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRgt6_lt51bp_gt95identity_merged | homalt | 100.0000 | 100.0000 | 100.0000 | 46.9849 | 211 | 0 | 211 | 0 | 0 | |
| raldana-dualsentieon | INDEL | D16_PLUS | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRlt7_lt51bp_gt95identity_merged | hetalt | 97.6139 | 95.3390 | 100.0000 | 30.9052 | 900 | 44 | 977 | 0 | 0 | |
| raldana-dualsentieon | INDEL | D16_PLUS | lowcmp_SimpleRepeat_diTR_11to50 | hetalt | 97.7169 | 95.5357 | 100.0000 | 32.6547 | 749 | 35 | 827 | 0 | 0 | |
| raldana-dualsentieon | INDEL | D16_PLUS | lowcmp_SimpleRepeat_diTR_51to200 | hetalt | 91.2020 | 83.8269 | 100.0000 | 33.9650 | 368 | 71 | 453 | 0 | 0 | |
| raldana-dualsentieon | INDEL | D16_PLUS | lowcmp_SimpleRepeat_homopolymer_6to10 | hetalt | 100.0000 | 100.0000 | 100.0000 | 54.2857 | 12 | 0 | 16 | 0 | 0 | |
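The page does not define how the Recall, Precision and F-score columns are computed. The sketch below assumes the usual definitions: recall from Truth TP and Truth FN, precision from Query TP and Query FP, and F-score as their harmonic mean, all expressed as percentages. It recomputes the metrics for the first two rows of the table. The function names are illustrative and are not part of precisionFDA or any benchmarking tool.

```python
def recall_pct(truth_tp: int, truth_fn: int) -> float:
    """Recall in percent: truth variants that were found (assumed definition)."""
    total = truth_tp + truth_fn
    return 100.0 * truth_tp / total if total else 0.0


def precision_pct(query_tp: int, query_fp: int) -> float:
    """Precision in percent: query calls that were correct (assumed definition)."""
    total = query_tp + query_fp
    return 100.0 * query_tp / total if total else 0.0


def f_score_pct(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall, both given in percent."""
    denom = precision + recall
    return 2.0 * precision * recall / denom if denom else 0.0


# (subset, genotype, Truth TP, Truth FN, Query TP, Query FP), copied from the table.
rows = [
    ("lowcmp_SimpleRepeat_homopolymer_6to10", "hetalt", 510, 25, 514, 0),
    ("lowcmp_SimpleRepeat_homopolymer_gt10", "het", 61, 26, 60, 0),
]

for subset, genotype, ttp, tfn, qtp, qfp in rows:
    r = recall_pct(ttp, tfn)
    p = precision_pct(qtp, qfp)
    f = f_score_pct(p, r)
    print(f"{subset} {genotype}: recall={r:.4f} precision={p:.4f} f_score={f:.4f}")
```

Under these assumptions the recomputed values agree with the table's Recall, Precision and F-score columns to four decimal places for these two rows. This is a consistency check against the published numbers, not a statement of how the challenge pipeline computed them.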