PrecisionFDA

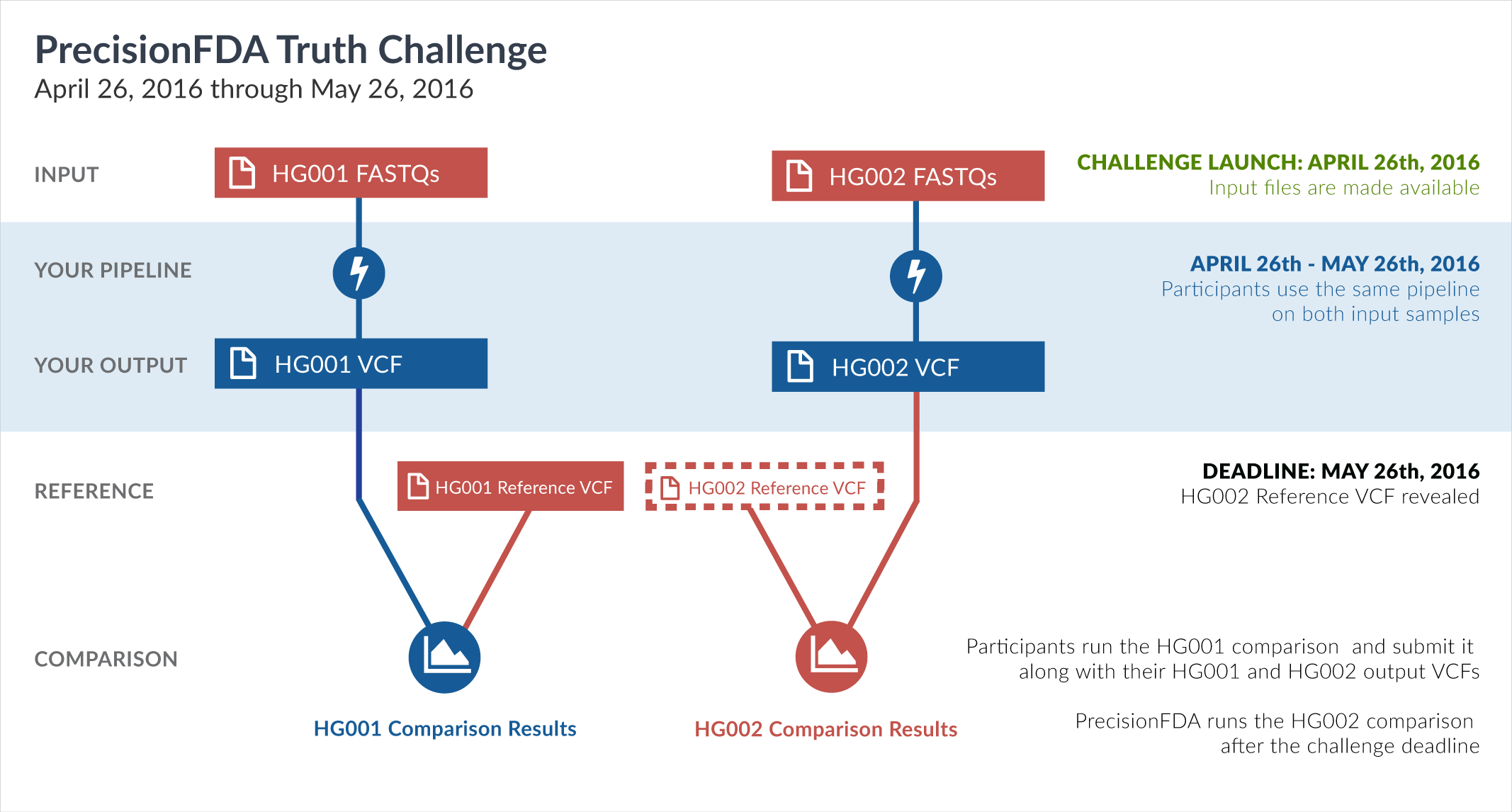

Truth Challenge

Engage and improve DNA test results with our community challenges

Explore HG002 comparison results

Use this interactive explorer to filter the results of all submitted entries along multiple dimensions, such as variant type, subtype, genomic subset, and genotype.
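The explorer's filtering can be approximated offline once results are in hand. The following is a minimal Python sketch that assumes rows exported with the same columns as the table on this page. The `Result` class and `filter_results` function are hypothetical names, not part of any precisionFDA API, and only a handful of rows and columns are included. A `*` in the table is treated as an ordinary value here; it appears to denote rows aggregated over that dimension.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Result:
    """One row of the comparison table (hypothetical structure, subset of columns)."""
    entry: str       # submission entry, e.g. "ckim-isaac"
    var_type: str    # "SNP" or "INDEL"
    subtype: str     # e.g. "ti", "D6_15", "I6_15"; "*" is kept as a literal value
    subset: str      # stratification region, e.g. "func_cds"
    genotype: str    # "het", "homalt", "hetalt", or "*"
    f_score: float


# A few rows copied from the results table on this page.
ROWS = [
    Result("ckim-isaac", "INDEL", "D6_15", "*", "hetalt", 90.6237),
    Result("ckim-isaac", "INDEL", "I6_15", "func_cds", "hetalt", 66.6667),
    Result("ckim-dragen", "SNP", "ti", "lowcmp_SimpleRepeat_triTR_11to50", "het", 99.8389),
    Result("hfeng-pmm2", "SNP", "*", "lowcmp_SimpleRepeat_triTR_11to50", "homalt", 99.9452),
    Result("gduggal-snapvard", "SNP", "*", "func_cds", "het", 99.2494),
    Result("hfeng-pmm2", "INDEL", "I6_15", "lowcmp_SimpleRepeat_triTR_51to200", "hetalt", 95.6522),
]


def filter_results(rows, **criteria):
    """Return rows whose fields equal every given criterion, best F-score first.

    Field names must match Result attributes; an unknown name raises AttributeError.
    With no criteria, every row is returned.
    """
    matches = [
        r for r in rows
        if all(getattr(r, field) == value for field, value in criteria.items())
    ]
    return sorted(matches, key=lambda r: r.f_score, reverse=True)


if __name__ == "__main__":
    for row in filter_results(ROWS, var_type="SNP", subset="lowcmp_SimpleRepeat_triTR_11to50"):
        print(row.entry, row.subtype, row.genotype, row.f_score)
```

Each keyword argument narrows the selection on one dimension, mirroring how the explorer combines filters.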

Showing results 22351-22400 of 86044.

| Entry | Type | Subtype | Subset | Genotype | F-score | Recall | Precision | Frac_NA | Truth TP | Truth FN | Query TP | Query FP | FP gt | % FP ma |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| gduggal-snapfb | INDEL | D1_5 | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRlt7_51to200bp_gt95identity_merged | hetalt | 68.0898 | 55.0736 | 89.1626 | 33.2237 | 711 | 580 | 362 | 44 | 24 | 54.5455 |
| asubramanian-gatk | INDEL | D1_5 | lowcmp_Human_Full_Genome_TRDB_hg19_150331_all_merged | hetalt | 96.1607 | 93.4776 | 99.0024 | 33.2299 | 8685 | 606 | 8733 | 88 | 82 | 93.1818 |
| asubramanian-gatk | INDEL | D1_5 | lowcmp_Human_Full_Genome_TRDB_hg19_150331 | hetalt | 96.1607 | 93.4776 | 99.0024 | 33.2299 | 8685 | 606 | 8733 | 88 | 82 | 93.1818 |
| ckim-gatk | INDEL | D16_PLUS | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRlt7_lt101bp_gt95identity_merged | hetalt | 96.5196 | 93.9394 | 99.2455 | 33.2429 | 1519 | 98 | 1710 | 13 | 13 | 100.0000 |
| ckim-vqsr | INDEL | D16_PLUS | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRlt7_lt101bp_gt95identity_merged | hetalt | 96.5196 | 93.9394 | 99.2455 | 33.2429 | 1519 | 98 | 1710 | 13 | 13 | 100.0000 |
| ltrigg-rtg1 | INDEL | D6_15 | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRlt7_51to200bp_gt95identity_merged | hetalt | 92.0444 | 86.0070 | 98.9933 | 33.2437 | 1469 | 239 | 1475 | 15 | 15 | 100.0000 |
| raldana-dualsentieon | SNP | ti | lowcmp_SimpleRepeat_quadTR_11to50 | homalt | 99.9875 | 100.0000 | 99.9749 | 33.2441 | 3987 | 0 | 3987 | 1 | 1 | 100.0000 |
| ckim-isaac | INDEL | D6_15 | * | hetalt | 90.6237 | 83.9246 | 98.4851 | 33.2460 | 6860 | 1314 | 7281 | 112 | 99 | 88.3929 |
| hfeng-pmm3 | INDEL | * | lowcmp_SimpleRepeat_diTR_11to50 | hetalt | 97.4355 | 95.0263 | 99.9701 | 33.2469 | 9954 | 521 | 10036 | 3 | 2 | 66.6667 |
| ckim-isaac | INDEL | I6_15 | lowcmp_SimpleRepeat_diTR_11to50 | hetalt | 83.6839 | 72.6708 | 98.6312 | 33.2487 | 1287 | 484 | 1297 | 18 | 13 | 72.2222 |
| asubramanian-gatk | INDEL | I6_15 | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRlt7_lt101bp_gt95identity_merged | hetalt | 95.2667 | 91.9260 | 98.8593 | 33.2487 | 2334 | 205 | 2860 | 33 | 31 | 93.9394 |
| ckim-dragen | SNP | ti | lowcmp_SimpleRepeat_triTR_11to50 | het | 99.8389 | 99.9596 | 99.7185 | 33.2528 | 2477 | 1 | 2480 | 7 | 3 | 42.8571 |
| hfeng-pmm2 | SNP | * | lowcmp_SimpleRepeat_triTR_11to50 | homalt | 99.9452 | 99.9635 | 99.9270 | 33.2602 | 2737 | 1 | 2737 | 2 | 1 | 50.0000 |
| raldana-dualsentieon | INDEL | D6_15 | lowcmp_SimpleRepeat_triTR_11to50 | * | 99.3616 | 98.9595 | 99.7670 | 33.2686 | 1712 | 18 | 1713 | 4 | 3 | 75.0000 |
| jlack-gatk | INDEL | * | lowcmp_SimpleRepeat_quadTR_51to200 | hetalt | 95.9073 | 92.8033 | 99.2261 | 33.2760 | 1109 | 86 | 1154 | 9 | 8 | 88.8889 |
| gduggal-snapvard | INDEL | D6_15 | HG002compoundhet | * | 59.7955 | 51.4782 | 71.3183 | 33.2912 | 4649 | 4382 | 4896 | 1969 | 1719 | 87.3032 |
| hfeng-pmm3 | INDEL | D16_PLUS | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRlt7_lt101bp_gt95identity_merged | hetalt | 96.5563 | 93.4447 | 99.8823 | 33.2941 | 1511 | 106 | 1697 | 2 | 2 | 100.0000 |
| gduggal-bwavard | INDEL | I1_5 | * | homalt | 95.1435 | 90.7626 | 99.9687 | 33.2973 | 54846 | 5582 | 54343 | 17 | 11 | 64.7059 |
| dgrover-gatk | INDEL | * | lowcmp_SimpleRepeat_diTR_11to50 | hetalt | 97.5627 | 95.6563 | 99.5467 | 33.3070 | 10020 | 455 | 10102 | 46 | 45 | 97.8261 |
| ltrigg-rtg2 | SNP | ti | HG002compoundhet | * | 99.3879 | 98.9873 | 99.7918 | 33.3089 | 17301 | 177 | 17255 | 36 | 13 | 36.1111 |
| gduggal-snapvard | SNP | * | func_cds | het | 99.2494 | 99.0323 | 99.4675 | 33.3113 | 11053 | 108 | 11020 | 59 | 21 | 35.5932 |
| ckim-isaac | INDEL | I16_PLUS | HG002compoundhet | hetalt | 65.1901 | 48.5428 | 99.2149 | 33.3115 | 1016 | 1077 | 1011 | 8 | 7 | 87.5000 |
| ckim-isaac | INDEL | D1_5 | lowcmp_SimpleRepeat_diTR_11to50 | * | 93.9157 | 91.6952 | 96.2464 | 33.3267 | 22502 | 2038 | 22436 | 875 | 753 | 86.0571 |
| eyeh-varpipe | INDEL | D1_5 | lowcmp_SimpleRepeat_diTR_11to50 | * | 84.4279 | 81.5933 | 87.4666 | 33.3286 | 20023 | 4517 | 20601 | 2952 | 2912 | 98.6450 |
| egarrison-hhga | INDEL | D16_PLUS | tech_badpromoters | * | 100.0000 | 100.0000 | 100.0000 | 33.3333 | 4 | 0 | 4 | 0 | 0 | |
| dgrover-gatk | INDEL | I1_5 | func_cds | hetalt | 100.0000 | 100.0000 | 100.0000 | 33.3333 | 2 | 0 | 2 | 0 | 0 | |
| ckim-isaac | INDEL | I6_15 | func_cds | * | 91.1392 | 83.7209 | 100.0000 | 33.3333 | 36 | 7 | 36 | 0 | 0 | |
| ckim-isaac | INDEL | I6_15 | func_cds | hetalt | 66.6667 | 50.0000 | 100.0000 | 33.3333 | 2 | 2 | 2 | 0 | 0 | |
| gduggal-snapvard | INDEL | I6_15 | lowcmp_SimpleRepeat_triTR_11to50 | homalt | 21.2121 | 11.8644 | 100.0000 | 33.3333 | 7 | 52 | 12 | 0 | 0 | |
| ghariani-varprowl | INDEL | D16_PLUS | tech_badpromoters | * | 100.0000 | 100.0000 | 100.0000 | 33.3333 | 4 | 0 | 4 | 0 | 0 | |
| gduggal-snapfb | INDEL | I6_15 | func_cds | hetalt | 50.0000 | 50.0000 | 50.0000 | 33.3333 | 2 | 2 | 1 | 1 | 1 | 100.0000 |
| gduggal-snapfb | INDEL | I6_15 | tech_badpromoters | hetalt | 80.0000 | 66.6667 | 100.0000 | 33.3333 | 2 | 1 | 2 | 0 | 0 | |
| hfeng-pmm1 | INDEL | D16_PLUS | tech_badpromoters | * | 100.0000 | 100.0000 | 100.0000 | 33.3333 | 4 | 0 | 4 | 0 | 0 | |
| ghariani-varprowl | INDEL | I6_15 | func_cds | homalt | 88.8889 | 80.0000 | 100.0000 | 33.3333 | 12 | 3 | 12 | 0 | 0 | |
| hfeng-pmm3 | INDEL | D16_PLUS | tech_badpromoters | * | 100.0000 | 100.0000 | 100.0000 | 33.3333 | 4 | 0 | 4 | 0 | 0 | |
| hfeng-pmm3 | INDEL | I1_5 | func_cds | hetalt | 100.0000 | 100.0000 | 100.0000 | 33.3333 | 2 | 0 | 2 | 0 | 0 | |
| jlack-gatk | INDEL | I1_5 | func_cds | hetalt | 100.0000 | 100.0000 | 100.0000 | 33.3333 | 2 | 0 | 2 | 0 | 0 | |
| hfeng-pmm1 | INDEL | I1_5 | func_cds | hetalt | 100.0000 | 100.0000 | 100.0000 | 33.3333 | 2 | 0 | 2 | 0 | 0 | |
| hfeng-pmm2 | INDEL | D16_PLUS | tech_badpromoters | * | 100.0000 | 100.0000 | 100.0000 | 33.3333 | 4 | 0 | 4 | 0 | 0 | |
| hfeng-pmm2 | INDEL | I1_5 | func_cds | hetalt | 100.0000 | 100.0000 | 100.0000 | 33.3333 | 2 | 0 | 2 | 0 | 0 | |
| hfeng-pmm2 | INDEL | I6_15 | lowcmp_SimpleRepeat_triTR_51to200 | hetalt | 95.6522 | 91.6667 | 100.0000 | 33.3333 | 11 | 1 | 12 | 0 | 0 | |
| ckim-dragen | INDEL | I1_5 | func_cds | hetalt | 100.0000 | 100.0000 | 100.0000 | 33.3333 | 2 | 0 | 2 | 0 | 0 | |
| ckim-dragen | INDEL | I6_15 | lowcmp_SimpleRepeat_triTR_51to200 | hetalt | 95.6522 | 91.6667 | 100.0000 | 33.3333 | 11 | 1 | 12 | 0 | 0 | |
| cchapple-custom | INDEL | D16_PLUS | tech_badpromoters | * | 100.0000 | 100.0000 | 100.0000 | 33.3333 | 4 | 0 | 4 | 0 | 0 | |
| cchapple-custom | INDEL | I6_15 | func_cds | homalt | 96.7742 | 100.0000 | 93.7500 | 33.3333 | 15 | 0 | 15 | 1 | 1 | 100.0000 |
| ciseli-custom | INDEL | D1_5 | tech_badpromoters | het | 60.0000 | 75.0000 | 50.0000 | 33.3333 | 6 | 2 | 6 | 6 | 2 | 33.3333 |
| gduggal-snapfb | INDEL | C1_5 | lowcmp_SimpleRepeat_quadTR_51to200 | het | 0.0000 | | 0.0000 | 33.3333 | 0 | 0 | 0 | 2 | 0 | 0.0000 |
| gduggal-bwaplat | INDEL | D16_PLUS | lowcmp_SimpleRepeat_triTR_51to200 | homalt | 86.9565 | 76.9231 | 100.0000 | 33.3333 | 10 | 3 | 10 | 0 | 0 | |
| gduggal-bwafb | INDEL | I16_PLUS | func_cds | het | 61.5385 | 44.4444 | 100.0000 | 33.3333 | 4 | 5 | 4 | 0 | 0 | |
| gduggal-bwafb | INDEL | I16_PLUS | tech_badpromoters | homalt | 100.0000 | 100.0000 | 100.0000 | 33.3333 | 2 | 0 | 2 | 0 | 0 | |
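The ratio columns can be recomputed from the count columns. The rows on this page are consistent with the following relations, which are inferred from the numbers rather than taken from precisionFDA documentation: Recall = Truth TP / (Truth TP + Truth FN), Precision = Query TP / (Query TP + Query FP), F-score is the harmonic mean of recall and precision, and % FP ma = FP gt / Query FP × 100. Recall and precision draw on different TP columns, which matters because the two counts can differ (711 vs 362 in the gduggal-snapfb D1_5 row). A minimal Python sketch under those assumptions; `pct` and `recompute` are hypothetical helper names.

```python
from typing import Optional


def pct(numerator: int, denominator: int) -> Optional[float]:
    """Return numerator/denominator as a percentage, or None if the denominator is 0."""
    if denominator == 0:
        return None
    return 100.0 * numerator / denominator


def recompute(truth_tp: int, truth_fn: int, query_tp: int, query_fp: int, fp_gt: int) -> dict:
    """Recompute the table's ratio columns from its counts (relations inferred from the data)."""
    recall = pct(truth_tp, truth_tp + truth_fn)      # uses the truth-side TP count
    precision = pct(query_tp, query_tp + query_fp)   # uses the query-side TP count
    if recall is None or precision is None:
        f_score = None                               # undefined if either input is undefined
    elif recall + precision == 0:
        f_score = 0.0
    else:
        f_score = 2 * recall * precision / (recall + precision)  # harmonic mean
    return {
        "recall": recall,
        "precision": precision,
        "f_score": f_score,
        "pct_fp_ma": pct(fp_gt, query_fp),           # FP gt as a percentage of Query FP
    }


if __name__ == "__main__":
    # gduggal-snapfb, INDEL, D1_5, ..._TRlt7_51to200bp_gt95identity_merged, hetalt
    row = recompute(truth_tp=711, truth_fn=580, query_tp=362, query_fp=44, fp_gt=24)
    print({name: round(value, 4) for name, value in row.items()})
```

For the gduggal-snapfb row this reproduces 55.0736, 89.1626, 68.0898 and 54.5455 after rounding to four decimals. For rows with no query false positives, `pct_fp_ma` comes out as `None`, matching the blank % FP ma cells in the table.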