PrecisionFDA

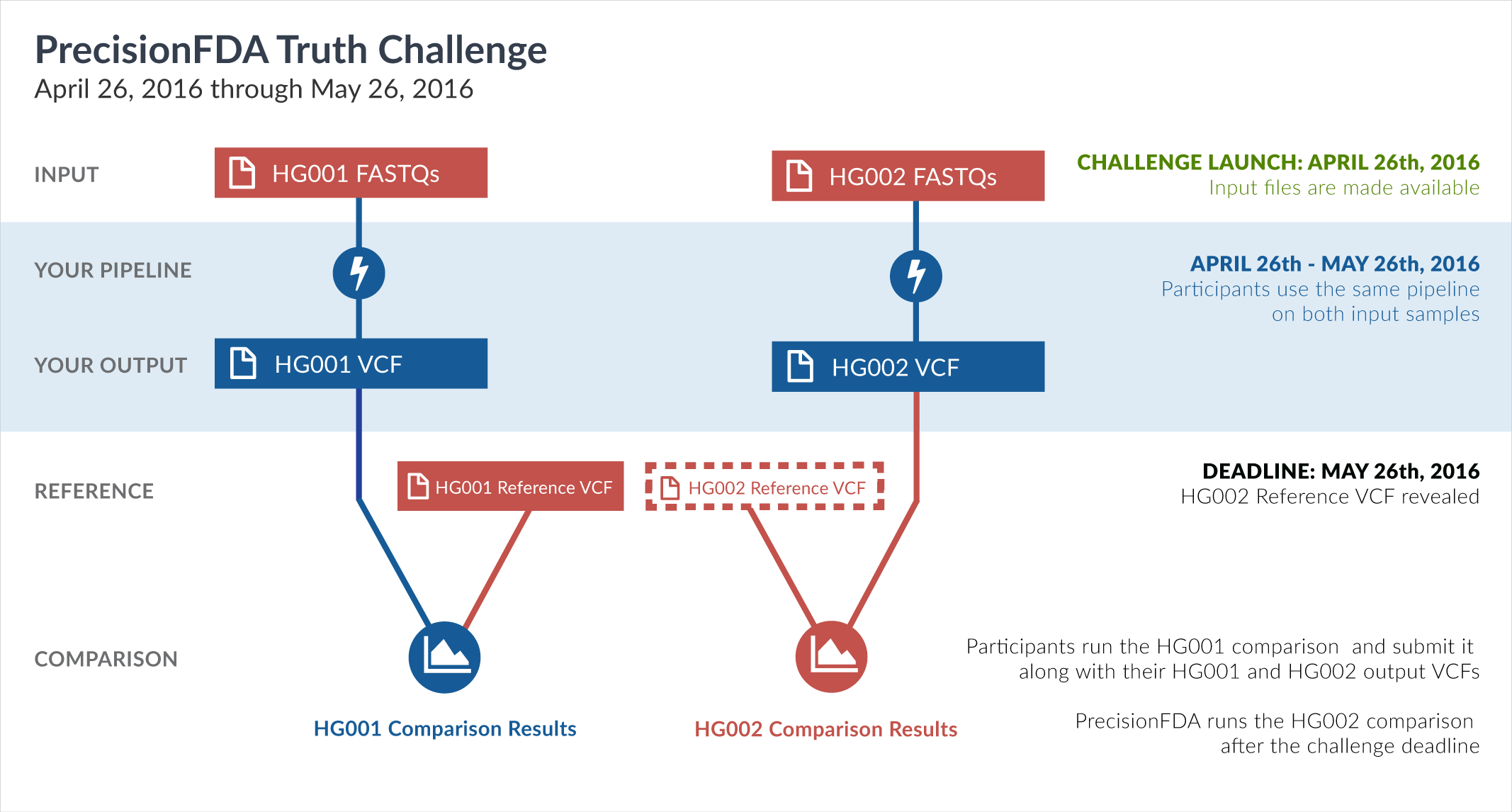

Truth Challenge

Engage and improve DNA test results with our community challenges

Explore HG002 comparison results

Use this interactive explorer to filter all results across submission entries and multiple dimensions.
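
The percentage columns in the table can be cross-checked against the count columns. The sketch below is inferred from the row values rather than from any stated definition: it assumes Recall = Truth TP / (Truth TP + Truth FN), Precision = Query TP / (Query TP + Query FP), F-score is the harmonic mean of the two, and "% FP ma" = FP gt / Query FP. The function names are hypothetical, and returning `None` for an undefined ratio is an assumption about how empty cells should be read.

```python
from typing import Optional


def pct(numerator: int, denominator: int) -> Optional[float]:
    """Return numerator/denominator as a percentage, or None if undefined."""
    if denominator == 0:
        return None  # assumption: an empty table cell means "undefined"
    return 100.0 * numerator / denominator


def recall(truth_tp: int, truth_fn: int) -> Optional[float]:
    # Assumed definition: share of truth variants that were recovered.
    return pct(truth_tp, truth_tp + truth_fn)


def precision(query_tp: int, query_fp: int) -> Optional[float]:
    # Assumed definition: share of query calls that were correct.
    return pct(query_tp, query_tp + query_fp)


def f_score(r: Optional[float], p: Optional[float]) -> Optional[float]:
    # Harmonic mean of recall and precision (both already in percent).
    if r is None or p is None or r + p == 0:
        return None
    return 2.0 * r * p / (r + p)


def pct_fp_ma(fp_gt: int, query_fp: int) -> Optional[float]:
    # Assumed relation, inferred from the rows: FP gt as a share of Query FP.
    return pct(fp_gt, query_fp)


# Row: ltrigg-rtg2 | INDEL | D6_15 | segdup | het
r = recall(truth_tp=90, truth_fn=2)
p = precision(query_tp=84, query_fp=2)
print(round(r, 4), round(p, 4), round(f_score(r, p), 4))  # 97.8261 97.6744 97.7502

# Row: ltrigg-rtg2 | INDEL | I16_PLUS | * | *
print(round(pct_fp_ma(fp_gt=71, query_fp=84), 4))  # 84.5238
```

Both printed lines match the values reported for those rows, which supports the inferred relations for rows where all denominators are non-zero.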

| Entry | Type | Subtype | Subset | Genotype | F-score | Recall | Precision | Frac_NA | Truth TP | Truth FN | Query TP | Query FP | FP gt | % FP ma | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| ltrigg-rtg2 | INDEL | D6_15 | segdup | het | 97.7502 | 97.8261 | 97.6744 | 92.4495 | 90 | 2 | 84 | 2 | 0 | 0.0000 | |

| ltrigg-rtg2 | INDEL | D6_15 | segdup | hetalt | 96.8421 | 93.8776 | 100.0000 | 90.1468 | 46 | 3 | 47 | 0 | 0 | ||

| ltrigg-rtg2 | INDEL | D6_15 | segdup | homalt | 98.9899 | 98.0000 | 100.0000 | 89.5966 | 49 | 1 | 49 | 0 | 0 | ||

| ltrigg-rtg2 | INDEL | D6_15 | segdupwithalt | * | 0.0000 | 100.0000 | 0 | 0 | 0 | 0 | 0 | ||||

| ltrigg-rtg2 | INDEL | D6_15 | segdupwithalt | het | 0.0000 | 100.0000 | 0 | 0 | 0 | 0 | 0 | ||||

| ltrigg-rtg2 | INDEL | D6_15 | segdupwithalt | hetalt | 0.0000 | 100.0000 | 0 | 0 | 0 | 0 | 0 | ||||

| ltrigg-rtg2 | INDEL | D6_15 | segdupwithalt | homalt | 0.0000 | 100.0000 | 0 | 0 | 0 | 0 | 0 | ||||

| ltrigg-rtg2 | INDEL | D6_15 | tech_badpromoters | * | 100.0000 | 100.0000 | 100.0000 | 50.0000 | 17 | 0 | 17 | 0 | 0 | ||

| ltrigg-rtg2 | INDEL | D6_15 | tech_badpromoters | het | 100.0000 | 100.0000 | 100.0000 | 44.4444 | 10 | 0 | 10 | 0 | 0 | ||

| ltrigg-rtg2 | INDEL | D6_15 | tech_badpromoters | hetalt | 100.0000 | 100.0000 | 100.0000 | 66.6667 | 1 | 0 | 1 | 0 | 0 | ||

| ltrigg-rtg2 | INDEL | D6_15 | tech_badpromoters | homalt | 100.0000 | 100.0000 | 100.0000 | 53.8462 | 6 | 0 | 6 | 0 | 0 | ||

| ltrigg-rtg2 | INDEL | I16_PLUS | * | * | 92.6024 | 87.3765 | 98.4933 | 47.8680 | 5572 | 805 | 5491 | 84 | 71 | 84.5238 | |

| ltrigg-rtg2 | INDEL | I16_PLUS | * | het | 93.4104 | 88.2634 | 99.1949 | 47.8107 | 2399 | 319 | 2341 | 19 | 7 | 36.8421 | |

| ltrigg-rtg2 | INDEL | I16_PLUS | * | hetalt | 90.2131 | 82.6025 | 99.3685 | 50.0717 | 1733 | 365 | 1731 | 11 | 11 | 100.0000 | |

| ltrigg-rtg2 | INDEL | I16_PLUS | * | homalt | 94.2470 | 92.2486 | 96.3340 | 45.0988 | 1440 | 121 | 1419 | 54 | 53 | 98.1481 | |

| ltrigg-rtg2 | INDEL | I16_PLUS | HG002complexvar | * | 92.2852 | 87.0130 | 98.2375 | 52.4691 | 1139 | 170 | 1059 | 19 | 16 | 84.2105 | |

| ltrigg-rtg2 | INDEL | I16_PLUS | HG002complexvar | het | 91.9911 | 86.1654 | 98.6616 | 46.5235 | 573 | 92 | 516 | 7 | 4 | 57.1429 | |

| ltrigg-rtg2 | INDEL | I16_PLUS | HG002complexvar | hetalt | 89.8660 | 82.0896 | 99.2701 | 60.2899 | 275 | 60 | 272 | 2 | 2 | 100.0000 | |

| ltrigg-rtg2 | INDEL | I16_PLUS | HG002complexvar | homalt | 95.2945 | 94.1748 | 96.4413 | 53.1667 | 291 | 18 | 271 | 10 | 10 | 100.0000 | |

| ltrigg-rtg2 | INDEL | I16_PLUS | HG002compoundhet | * | 88.6244 | 82.1745 | 96.1730 | 41.5559 | 1761 | 382 | 1734 | 69 | 67 | 97.1014 | |

| ltrigg-rtg2 | INDEL | I16_PLUS | HG002compoundhet | het | 69.4745 | 61.7021 | 79.4872 | 79.6875 | 29 | 18 | 31 | 8 | 7 | 87.5000 | |

| ltrigg-rtg2 | INDEL | I16_PLUS | HG002compoundhet | hetalt | 90.2840 | 82.6087 | 99.5316 | 37.2520 | 1729 | 364 | 1700 | 8 | 8 | 100.0000 | |

| ltrigg-rtg2 | INDEL | I16_PLUS | HG002compoundhet | homalt | 10.1695 | 100.0000 | 5.3571 | 67.2515 | 3 | 0 | 3 | 53 | 52 | 98.1132 | |

| ltrigg-rtg2 | INDEL | I16_PLUS | decoy | * | 0.0000 | 100.0000 | 0 | 0 | 0 | 0 | 0 | ||||

| ltrigg-rtg2 | INDEL | I16_PLUS | decoy | het | 0.0000 | 100.0000 | 0 | 0 | 0 | 0 | 0 | ||||

| ltrigg-rtg2 | INDEL | I16_PLUS | decoy | hetalt | 0.0000 | 100.0000 | 0 | 0 | 0 | 0 | 0 | ||||

| ltrigg-rtg2 | INDEL | I16_PLUS | decoy | homalt | 0.0000 | 100.0000 | 0 | 0 | 0 | 0 | 0 | ||||

| ltrigg-rtg2 | INDEL | I16_PLUS | func_cds | * | 90.9091 | 83.3333 | 100.0000 | 50.0000 | 10 | 2 | 10 | 0 | 0 | ||

| ltrigg-rtg2 | INDEL | I16_PLUS | func_cds | het | 94.1176 | 88.8889 | 100.0000 | 42.8571 | 8 | 1 | 8 | 0 | 0 | ||

| ltrigg-rtg2 | INDEL | I16_PLUS | func_cds | hetalt | 0.0000 | 0.0000 | 0.0000 | 0 | 1 | 0 | 0 | 0 | |||

| ltrigg-rtg2 | INDEL | I16_PLUS | func_cds | homalt | 100.0000 | 100.0000 | 100.0000 | 66.6667 | 2 | 0 | 2 | 0 | 0 | ||

| ltrigg-rtg2 | INDEL | I16_PLUS | lowcmp_AllRepeats_51to200bp_gt95identity_merged | * | 77.5746 | 65.5340 | 95.0355 | 74.5946 | 135 | 71 | 134 | 7 | 5 | 71.4286 | |

| ltrigg-rtg2 | INDEL | I16_PLUS | lowcmp_AllRepeats_51to200bp_gt95identity_merged | het | 75.5891 | 62.1053 | 96.5517 | 74.3363 | 59 | 36 | 56 | 2 | 0 | 0.0000 | |

| ltrigg-rtg2 | INDEL | I16_PLUS | lowcmp_AllRepeats_51to200bp_gt95identity_merged | hetalt | 81.4336 | 70.9302 | 95.5882 | 72.4696 | 61 | 25 | 65 | 3 | 3 | 100.0000 | |

| ltrigg-rtg2 | INDEL | I16_PLUS | lowcmp_AllRepeats_51to200bp_gt95identity_merged | homalt | 70.9091 | 60.0000 | 86.6667 | 81.7073 | 15 | 10 | 13 | 2 | 2 | 100.0000 | |

| ltrigg-rtg2 | INDEL | I16_PLUS | lowcmp_AllRepeats_gt200bp_gt95identity_merged | * | 0.0000 | 100.0000 | 0 | 0 | 0 | 0 | 0 | ||||

| ltrigg-rtg2 | INDEL | I16_PLUS | lowcmp_AllRepeats_gt200bp_gt95identity_merged | het | 0.0000 | 100.0000 | 0 | 0 | 0 | 0 | 0 | ||||

| ltrigg-rtg2 | INDEL | I16_PLUS | lowcmp_AllRepeats_gt200bp_gt95identity_merged | hetalt | 0.0000 | 100.0000 | 0 | 0 | 0 | 0 | 0 | ||||

| ltrigg-rtg2 | INDEL | I16_PLUS | lowcmp_AllRepeats_gt200bp_gt95identity_merged | homalt | 0.0000 | 100.0000 | 0 | 0 | 0 | 0 | 0 | ||||

| ltrigg-rtg2 | INDEL | I16_PLUS | lowcmp_AllRepeats_lt51bp_gt95identity_merged | * | 91.3456 | 85.6266 | 97.8831 | 68.3472 | 977 | 164 | 971 | 21 | 20 | 95.2381 | |

| ltrigg-rtg2 | INDEL | I16_PLUS | lowcmp_AllRepeats_lt51bp_gt95identity_merged | het | 91.4566 | 84.8624 | 99.1620 | 69.5837 | 370 | 66 | 355 | 3 | 2 | 66.6667 | |

| ltrigg-rtg2 | INDEL | I16_PLUS | lowcmp_AllRepeats_lt51bp_gt95identity_merged | hetalt | 90.9728 | 84.5626 | 98.4344 | 65.7965 | 493 | 90 | 503 | 8 | 8 | 100.0000 | |

| ltrigg-rtg2 | INDEL | I16_PLUS | lowcmp_AllRepeats_lt51bp_gt95identity_merged | homalt | 92.6496 | 93.4426 | 91.8699 | 73.4341 | 114 | 8 | 113 | 10 | 10 | 100.0000 | |

| ltrigg-rtg2 | INDEL | I16_PLUS | lowcmp_Human_Full_Genome_TRDB_hg19_150331 | * | 87.8760 | 79.8693 | 97.6669 | 68.5714 | 1222 | 308 | 1214 | 29 | 21 | 72.4138 | |

| ltrigg-rtg2 | INDEL | I16_PLUS | lowcmp_Human_Full_Genome_TRDB_hg19_150331 | het | 86.4541 | 77.3273 | 98.0237 | 68.7461 | 515 | 151 | 496 | 10 | 2 | 20.0000 | |

| ltrigg-rtg2 | INDEL | I16_PLUS | lowcmp_Human_Full_Genome_TRDB_hg19_150331 | hetalt | 90.2206 | 83.1563 | 98.5965 | 64.1509 | 548 | 111 | 562 | 8 | 8 | 100.0000 | |

| ltrigg-rtg2 | INDEL | I16_PLUS | lowcmp_Human_Full_Genome_TRDB_hg19_150331 | homalt | 84.7522 | 77.5610 | 93.4132 | 77.6139 | 159 | 46 | 156 | 11 | 11 | 100.0000 | |

| ltrigg-rtg2 | INDEL | I16_PLUS | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRgt6_51to200bp_gt95identity_merged | * | 75.2215 | 62.0370 | 95.5224 | 69.6833 | 67 | 41 | 64 | 3 | 2 | 66.6667 | |

| ltrigg-rtg2 | INDEL | I16_PLUS | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRgt6_51to200bp_gt95identity_merged | het | 74.7296 | 60.6061 | 97.4359 | 66.9492 | 40 | 26 | 38 | 1 | 0 | 0.0000 | |

| ltrigg-rtg2 | INDEL | I16_PLUS | lowcmp_Human_Full_Genome_TRDB_hg19_150331_TRgt6_51to200bp_gt95identity_merged | hetalt | 77.6119 | 66.6667 | 92.8571 | 66.6667 | 12 | 6 | 13 | 1 | 1 | 100.0000 | |